Why It’s So Difficult To Change People’s Minds

Belief Networks, Echo Chambers, And Perverse Social Incentives

1. Introduction

I am not the first person to recognize how futile it usually is to argue with someone else who believes in a different ideology. The Story of Us blog series by WaitButWhy gives a lot of aesthetic visuals, text, and metaphors for describing the current state of political discussion in the modern world, but it fails to describe the epistemological reasons why people are so strongly inclined to not change their minds when they disagree with others. This essay is an attempt to describe what that series and most other works have failed to recognize.

Anyone can prattle nonsense, and they’ll always be able to find people to believe it, especially if they can dress it up in superstitious flummery. Careful reasoning and experience of the world are needed. – Wang Chong

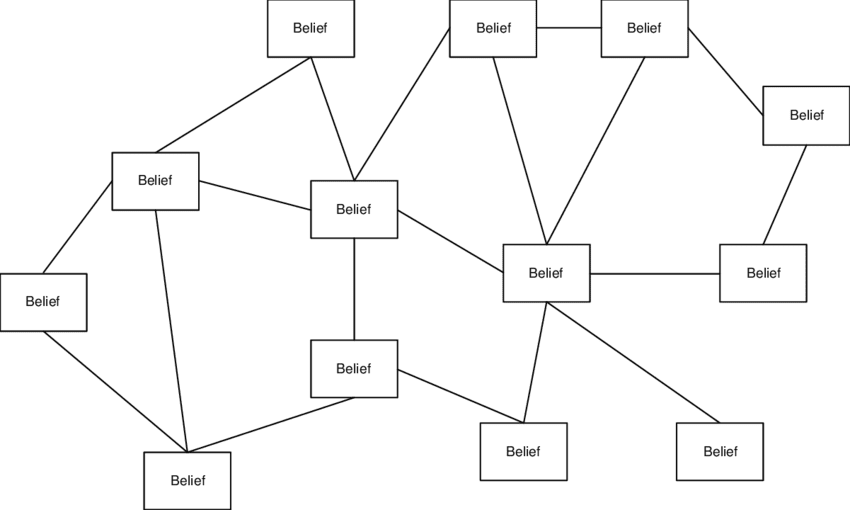

Visually, we can theoretically use four different graphs for understanding ideology:

- Value Hierarchy Graphs

- Belief Network Graphs

- Word/Language Cloud Graphs (with each word’s font size being proportional to its relative frequency in the believer’s vocabulary corpus)

- Sociograms: Social Network Graphs

We will talk more about each of these.
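As a rough illustration of the third graph type, the font-size rule for a word cloud can be sketched in a few lines. This is only a minimal sketch: the function name `font_sizes`, the `max_pt` parameter, and the sample text are hypothetical, and the most frequent word is simply assigned the largest size.

```python
import re
from collections import Counter

def font_sizes(text, max_pt=48.0):
    """Give each word a font size proportional to its relative frequency.

    Assumption: the most frequent word is drawn at max_pt and every
    other word is scaled down in proportion to its count.
    """
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    if not counts:
        return {}
    top = max(counts.values())
    return {word: max_pt * n / top for word, n in counts.items()}

# Hypothetical vocabulary sample from one believer.
sizes = font_sizes("freedom freedom freedom tax tax market")
for word, size in sorted(sizes.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {size:.0f}pt")
```

Comparing the largest words in two believers’ clouds gives a quick, if crude, picture of how differently they talk about the same topics.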

I will add more content to this webpage when I have time.

2. Core Values and Theories of Value

Values and theories of values will often be the most central hubs of a person’s belief network. There is ultimately no a priori justification for anything, so this creates a lot of diversity for potential values and theories of value. A value can be self-affirming after you have it, but one cannot judge a value without a value. For that reason, all the core values in a theory of value tend to support each other, and they imply other lower levels of value that also cohere to each other.

Belief networks are structured around theories of value. Both the value theory (or value graph) and the belief network (or belief map) can be graphed visually. If one can be convinced to change their theory of value, then all the beliefs within their belief network that are dependent on the values within their theory will no longer be supported.
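The dependency described above can be sketched as a small graph computation. This is a minimal sketch under a stated assumption: a belief is treated as unsupported only once every value or belief that backed it is gone. The names `unsupported_after`, `V1`, `B1`, and so on are hypothetical labels, not part of any real tool.

```python
def unsupported_after(supports, removed):
    """Return the beliefs left with no support once `removed` is dropped.

    `supports` maps each node to the set of nodes that back it up.
    Nodes with an empty set are treated as core values, which do not
    depend on anything else in the network.
    """
    gone = {removed}
    changed = True
    while changed:
        changed = False
        for belief, backers in supports.items():
            if belief in gone or not backers:
                continue
            if backers <= gone:  # every supporter has been discarded
                gone.add(belief)
                changed = True
    return gone - {removed}

# Hypothetical network: two core values (V1, V2) and four beliefs.
supports = {
    "V1": set(),
    "V2": set(),
    "B1": {"V1"},
    "B2": {"V1", "B1"},
    "B3": {"V2"},
    "B4": {"V1", "V2"},
}
print(sorted(unsupported_after(supports, "V1")))
```

In this toy network, discarding `V1` takes `B1` and `B2` with it, while `B4` survives because `V2` still supports it.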

Often times, people will believe what they want to believe, not what is true. In such cases, people may project their value graphs onto reality. In doing so, they fail to understand what reality is really like. Likewise, people will disagree on political changes when one side benefits them more than the other. Even if one side is clearly better for society as a whole, it can be difficult for individuals to understand that when they have so much to lose if the other side seizes power.

Generally speaking, culture determines the most popular values, beliefs, and arguments, not rational thought.

3. How Coherentism and Belief Networks Work

3.1. Introduction To Coherentism

Main Article: Resolving the Munchhausen Trilemma and How Coherentism Works.

The curiosity and striving for knowledge is largely driven by the search for coherence, which is the striving for a model of reality that is as accurate as possible. A belief network is a collection of beliefs that form a subjective model of reality. Belief networks are similar to argument maps, except that they’re more comprehensive and they include much more than just arguments. (Note that these are not to be confused with Bayesian Networks, which are also often called belief networks.)

The brain probably goes through some sort of algorithm where every time it sees a pattern that reaffirms the perceived consistency and correctness in its beliefs, the brain reaffirms its beliefs and self-justifies that it is the one that is correct. The exact opposite happens and lots of the doubting emotion surges if the brain perceives many contradictions in its beliefs and thinking.

Most people have something analogous to ChatGPT in their brains, which generates socially acceptable speech in context, but they don’t really have any deep understanding of anything. When people are reading the articles, literature, and arguments spread within their respective echo chambers, they are essentially training the ChatGPT corpus inside their brains to respond with similar answers.

3.2. Modeling Belief Networks As Hub-And-Spoke Diagrams

3.2.1. Belief Networks

We have many other beliefs that we believe in that are all connected in similar networks, besides just ideological ones. The beliefs within the ideological belief system are a subset of one’s entire belief system, that is their entire mental model of reality from the perspective of them as a subject.

Everybody has a different coherentist belief network for everything that they believe in. Hence, debating is mostly pointless: every person has strong coherence within their belief network, so everybody thinks that they’re right. People can occasionally persuade interlocutors who hold different beliefs, but this has a very low probability of succeeding, since the coherence within every person’s belief network gives everybody a strong sense that they are right and everybody else is wrong. For each topic, the belief network(s) that a person may have corresponds to that person’s cognitive level.

The goal of debating is to attack the nodes in the opponent’s belief network, but even more so to change the belief networks of the audience members.

3.2.2. Drawing Belief Maps

One of the most important strengths of drawing a belief network as a graph is that it allows us to trace the path of justification when a believer backs up a belief. For example, if I argue against an ideologue, who has a very strong coherence in their beliefs, we may notice that when they hear an argument A, argument B, argument C, etc. for opposing their ideology, all their rebuttals follow a pattern(s) around the belief network graph that gives us a better idea of where to start dismantling their belief network.
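Tracing a path of justification through a belief map can be sketched as an ordinary graph search. This is a minimal sketch: the function name `justification_path` and the node labels are hypothetical, and each belief is assumed to list the beliefs that the believer cites when backing it up.

```python
from collections import deque

def justification_path(supported_by, belief, hub):
    """Breadth-first search from a belief back to a hub.

    `supported_by` maps each belief to the beliefs cited in its support.
    Returns the shortest chain of justification, or None if the belief
    never traces back to the given hub.
    """
    queue = deque([[belief]])
    seen = {belief}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == hub:
            return path
        for parent in supported_by.get(node, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(path + [parent])
    return None

# Hypothetical map: two different rebuttals lean on the same belief B1.
supported_by = {
    "rebuttal to A": ["B2"],
    "rebuttal to C": ["B3"],
    "B2": ["B1"],
    "B3": ["B1"],
    "B1": ["core value"],
}
print(justification_path(supported_by, "rebuttal to A", "core value"))
print(justification_path(supported_by, "rebuttal to C", "core value"))
```

When several rebuttals pass through the same node, as both do through `B1` here, that node is a natural place to start.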

A person’s understanding of other people’s belief networks is also a part of their belief network. If someone has strawman beliefs about someone else’s belief network, then that is a barrier to their understanding the other person’s beliefs, which in turn shapes their own beliefs.

The main drawback to drawing complete belief maps is that it is very difficult and time-consuming to show all of a person’s ideological beliefs, especially if they have dozens or even hundreds of beliefs with hundreds of connections between them. Since a person may have thousands or millions of beliefs, belief maps are most useful when they only show the ones that are relevant for understanding the psychology of an ideologue.

When people outline their arguments in a premises, propositions, and conclusion format, they are taking different nodes from their belief network and stating them in an explicit format instead of an implicit one. However, these textual belief networks have the limitation that they don’t always list all of the implicit premises and selective attention biases that the believer used to reach their conclusion(s).

Often times, when people construct their own Internet Wikis or Encyclopedias (e.g. Wikipedia, Conservapedia, RatWiki, Metapedia, etc), the encyclopedia writers are just constructing articles that show the maps and details linking all of their beliefs together. Another example would be how this entire website and all its links illustrate what Zero Contradictions’ belief network looks like, aside from any out-of-date content or external links that mention beliefs that he disagrees with (but are otherwise mostly good).

3.2.3. Changing Other People’s Belief Networks

When people criticize opposing ideologies, they will often have one argument that they think is a silver bullet that they can utter that will automatically win the debate in their favor. Sometimes this actually does happen, and it’s easier to happen the more ridiculous the opposing person’s claim is. When the supposed silver bullet doesn’t convince the other person that they’re wrong (and there is no censorship to shut the debate down), what usually ensues is a lengthy debate where the opposing ideologist recites other beliefs connected to the belief node that is under attack. This makes it challenging to refute even a single belief, because the debaters aren’t just attacking a single belief, they’re attacking the entire network of beliefs that all back up and support that one belief node.

When people convert to new ideologies, it can be a gradual process if they’re slowly discarding beliefs from their former worldview (they’re removing the spokes within their belief network), or a really rapid process where a multitude of values and/or beliefs are discarded all at once when a particular value or belief is proven wrong or contradictory, or when they choose to adopt a new theory of value (one of the central hubs of the belief network). Perhaps even a new ideology is introduced, although this part will often be a lengthy process since it usually takes time to adapt and get used to a new ideology.

For faster results, it is more efficient to attack the hubs of an ideological belief system rather than the spokes, when the persuader is reasonably certain that the core values and beliefs in a believer’s belief network can be disproven in one fell swoop. Unfortunately, this is usually harder to do because the hubs have many, many connections. You’ll almost never move someone to change their opinion by claiming and explaining that their entire worldview is wrong. Most people will push back when pushed, so it’s usually a more successful strategy to attack the “spoke” beliefs and spur them to discover the rest themselves.

We can think of the relative worth of dismantling other people’s beliefs in terms of a cost-benefit analysis. If you manage to dismantle one of the beliefs that is more well-connected than the others, then there is a greater reward for doing so, but it can also be more difficult, because the greater number of connections to that central belief makes it very difficult to let go of. Conversely, attacking more minor beliefs could be easier since they have fewer connections to the other beliefs, but there is also a smaller payoff for disproving them due to their fewer connections.

Generally speaking, there is never a “right” way to dismantle someone else’s belief system because every person’s beliefs are different. It’s usually better to start with the spoke beliefs, or one can start with the hub beliefs if they’re really confident and they know the other person’s belief network well. Hubs and spokes have different payoffs that tend to be proportional to the number of connections that they have, so the best beliefs to refute are the ones that you think you will have the best shot at.
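The cost-benefit reasoning above can be sketched as a simple expected-payoff ranking. This is only a sketch under loud assumptions: the payoff of refuting a belief is taken to be its number of connections, and `est_success` holds the persuader’s own guesses at how likely each refutation is to land. The function name `rank_targets` and all of the numbers are hypothetical.

```python
from collections import Counter

def rank_targets(edges, est_success):
    """Rank beliefs by estimated chance of success times connection count."""
    degree = Counter()
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    # Beliefs without an estimate are treated as having no chance of success.
    scored = [(est_success.get(node, 0.0) * deg, node)
              for node, deg in degree.items()]
    scored.sort(reverse=True)
    return [node for _, node in scored]

# Hypothetical network: one hub with three spokes, two of them linked.
edges = [("hub", "s1"), ("hub", "s2"), ("hub", "s3"), ("s1", "s2")]
# The hub is well connected but hard to dislodge; the spokes are easier.
est_success = {"hub": 0.1, "s1": 0.5, "s2": 0.4, "s3": 0.9}
print(rank_targets(edges, est_success))
```

With these made-up numbers the hub ranks last despite having the largest payoff, which matches the advice to start with the spokes unless one is really confident about the hub.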

It seems that people with fewer critical thinking skills tend to rely almost exclusively on pointing out contradictions in ideas that they don’t like, when they choose to criticize ideas. Higher-level thought would involve giving more comprehensive arguments why other people’s ideas are wrong. People who seek to convert other people’s belief networks generally aren’t interested in changing their own views because: 1. they already have strong confidence / coherence in their beliefs if they think that they can convert other people, and 2. people want to be in the front of social movements.

Dogmatic people won’t change their minds if they realize that one of their arguments is refuted. Instead, they will switch arguments. That’s a good indicator that they’re debating in bad faith and have a fixed conclusion. A rational person would put forth what they think is their most persuasive argument first. If they lose that argument and they’re arguing in good faith, they’ll say: “okay, you beat my best argument(s). I have to rethink what I believe.” They won’t just switch to a weaker one, unless they’re genuinely curious about how the opponent would respond to it.

3.2.4. Belief Maps And Doxastic Logic

Doxastic Logic is a type of logic concerned with reasoning about beliefs. Some time, I should investigate it further to see what kinds of discoveries (if any) could be made if doxastic logic was used to reason about belief networks. Until then, I can only describe belief networks in terms of propositional logic, which I already know.

When drawing the directed edges between all the different nodes in someone’s belief network, we can label each of these edges with boolean operations, because the combination of these beliefs and the format of these boolean operations is equivalent to when people give formal arguments and explicitly list out the propositions that they used to reach their conclusions. If the directed edges do not have any boolean operations on them, then that indicates that one belief implies the belief in front of the arrow.

If there is a directed edge from belief1 to belief2 and a directed edge from belief2 to belief1, then that probably indicates that the relationship between those two beliefs (or two propositions, if we think of them as propositions instead) is a biconditional.

For example, if (A ^ B ^ C) -> D, then (~A v ~B v ~C) could make D undecided, but we would need software that can convey a 3-to-1 edge connection from A, B, and C to D.
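The 3-to-1 edge can be represented in code even where diagram software cannot draw it. Below is a minimal sketch: each rule is a hypothetical `(premises, conclusion)` pair, and a belief with no entry in the result is treated as undecided. Under that assumption, rejecting A does not make D false; it only leaves D without support.

```python
def evaluate(rules, known):
    """Propagate beliefs through conjunctive (AND) edges.

    `rules` is a list of (premises, conclusion) pairs, where the
    conclusion follows only if every premise is believed true.
    `known` maps beliefs to True or False; beliefs missing from the
    returned dict are undecided.
    """
    status = dict(known)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion in status:
                continue
            if all(status.get(p) is True for p in premises):
                status[conclusion] = True
                changed = True
    return status

# (A ^ B ^ C) -> D as a single 3-to-1 edge.
rules = [(("A", "B", "C"), "D")]
print(evaluate(rules, {"A": True, "B": True, "C": True}).get("D"))
print(evaluate(rules, {"A": False, "B": True, "C": True}).get("D"))
```

A biconditional between two beliefs can be written in the same format as a pair of one-premise rules, one in each direction.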

3.3. When Belief Networks Break Down

3.3.1. How People React

When someone has a fallacious and/or inconsistent belief system, finding different ways to prove the same conclusion is essential for helping the necessary amount of contradictions build up in the believer’s mind, so that they can ultimately reject their previous, fallacious beliefs. People believe in whatever is coherent with the rest of the beliefs that they believe in, so if they’re able to recognize enough contradictions between things that they definitively believe in and things that they less definitively believe in, they will eventually abandon the less definitive beliefs in order to stabilize their structure of beliefs to a point where they perceive their beliefs to be stable once again, whether or not they actually are in reality.

When people ask other believers of their ideology about things that they are unsure of, it is typically because they are seeking coherence for one of the weaker nodes of their belief network. These moments are often great times to strike at the ideology and expose contradictions within the belief system, if there are any. The rejection or further propagation of the ideology depends on its ability to generate coherent-sounding explanations for times like these where coherence is lacking.

We have already established that in order to validly believe anything, there needs to be at least some evidence. But the need for coherence often causes ideologues to come up with crazy ideas in order to increase the coherence of their ideological belief systems, and a lot of ideologues cite their belief systems, and what they have observed, as the evidence in favor of those crazy ideas. This reveals that whatever a believer considers “evidence” is any fragment of their belief system, which has formed according to their life experiences as a subject. Evidence seems to be nearly synonymous with beliefs.

If people are particularly dogmatic, they’ll even resort to question evasion tactics, which help them feel like they still have coherence, if they’re too ideologically motivated to concede that they’re wrong.

One of the reasons why I like to write FAQs pages for addressing people’s questions is that I believe from my own personal experiences that they are a really effective way to address multiple spoke beliefs of an individual’s belief system at once.

3.3.2. Apophenia and Confirmation Bias

A lot of people are aware of what confirmation bias is, but almost no one understands why it occurs (since most people are ignorant of epistemology). Since coherence is necessary in order to form strong belief systems, ideologues make up bad ideas that aren’t grounded in reality in order to increase the coherence of what they believe and to avoid recognizing how their ideologies contradict reality.

Since most people are unaware that belief systems are structured according to coherentism, every time they see or hear something that they perceive to reaffirm their belief system, they only interpret what they have seen as more evidence for believing that they are right. They aren’t aware that ideologies cause people to look for patterns that conform with their beliefs, instead of patterns that contradict their beliefs. Every person has an ideological filter that causes them to interpret events according to their ideology, instead of what the events are actual signs of.

Example: The 2022 Buffalo Shooting

- Conservatives pointed out how: 1. the shooter’s manifesto stated that he was always authoritarian left-leaning, 2. that he did the attack to provoke lawmakers to pass more gun control laws that would supposedly encourage white people to fight for their rights, 3. they interpreted his race realism facts and study citations as racist pseudoscience (mainly because most people never got to see the manifesto since it was censored), etc.

- Leftists used the manifesto as evidence that white supremacy is a serious issue, despite how remarkably rare incidents like these are. And many leftists lied by saying that the shooter was a conservative (even though the manifesto says that he was left-leaning), etc.


3.3.3. Why Ideologues Come Up With Ridiculous Ideas

In order for memes and ideologies to reproduce themselves, they need to have strong links that reinforce the other ideas inside the believers’ belief systems, and crazy, ridiculous ideas can do just that. Some examples:

- Thomas Aquinas came up with his five arguments in favor of God because it was a way to increase the coherence of the Christian belief system, which is why the arguments gained a lot of popularity.

- How many Democrats believe that Republicans want Voter ID laws because they want to prevent minorities from voting, even though the real reason is that Republicans are concerned with voter fraud.

- How leftists will insist that conservatives are anti-immigration only because they are racist, when they’re actually concerned with crimes committed by illegal immigrants, and they believe that the country’s resources and welfare should be preserved only for the country’s citizens.

- How many far-leftists believe that right-wingers want people to have more children only to “create more wage slaves and feed Capitalism’s need for endless growth”.

- Many hard-core Communists claim that all the information about North Korea being a dictatorship instead of a democracy was fabricated by western and mainstream media outlets. Example. This belief is effective for reinforcing the belief in Communism because it gives the believers a reason to easily discredit the vast majority of information out there.

- How Libertarians came up with Cornucopianism to compensate for their belief that population control and other governmental measures are not necessary to regulate the population, even though it makes no historical or biological sense and greatly overestimates the potential of technology.

- This Ancap who came up with the conspiracy theory that the world’s governments want to depopulate the Earth as an explanation for why bad events are happening (from his POV).

- Many people instinctively reject race realism because they don’t know any better, and due to how memetics tends to work.

- Being taught all sorts of great things about individualism and independence is a factor that leads people (particularly Libertarians) to naïvely reject race realism because in theory everybody should be equal in an ideal individualist world.

- Some leftists think that conservatives want to ban abortion because they “want to control women’s bodies”, when the real reason is that conservatives believe that unborn infants have a right to life.

- Creationists who insist that dinosaur bones were constructed and planted in the ground to provide evidence for evolution.

- These Efilists who assumed that Blithering Genius was a religious person and “pretends everyone else doesn’t have feelings” since it’s easier for them to believe that, since actually confronting his arguments against Efilism would weaken the coherence of their belief systems.

Interestingly, many of the craziest, most delusional lies tend to be brainstormed when political ideologues and utopian ideologists are trying to brainstorm propositions that they believe to be true (even if there’s little to no evidence regarding the actual truth) in order to maintain the coherency of whatever crazy, ridiculous nonsense that they believe.

3.4. Software For Mapping Belief Networks

3.5. Examples of Opposing Ideologies For Demonstrating How Belief Networks Work

3.5.1. Religious Versus Atheist Beliefs

Pro-life people frequently repeat that there are many contraceptives available, so there should be no excuse if a woman got pregnant from consensual sex. They repeat this frequently, because this justification is a major node within their belief network for opposing the legality of abortions.

[This section is unfinished]

3.5.2. Georgist Versus Ancap Beliefs

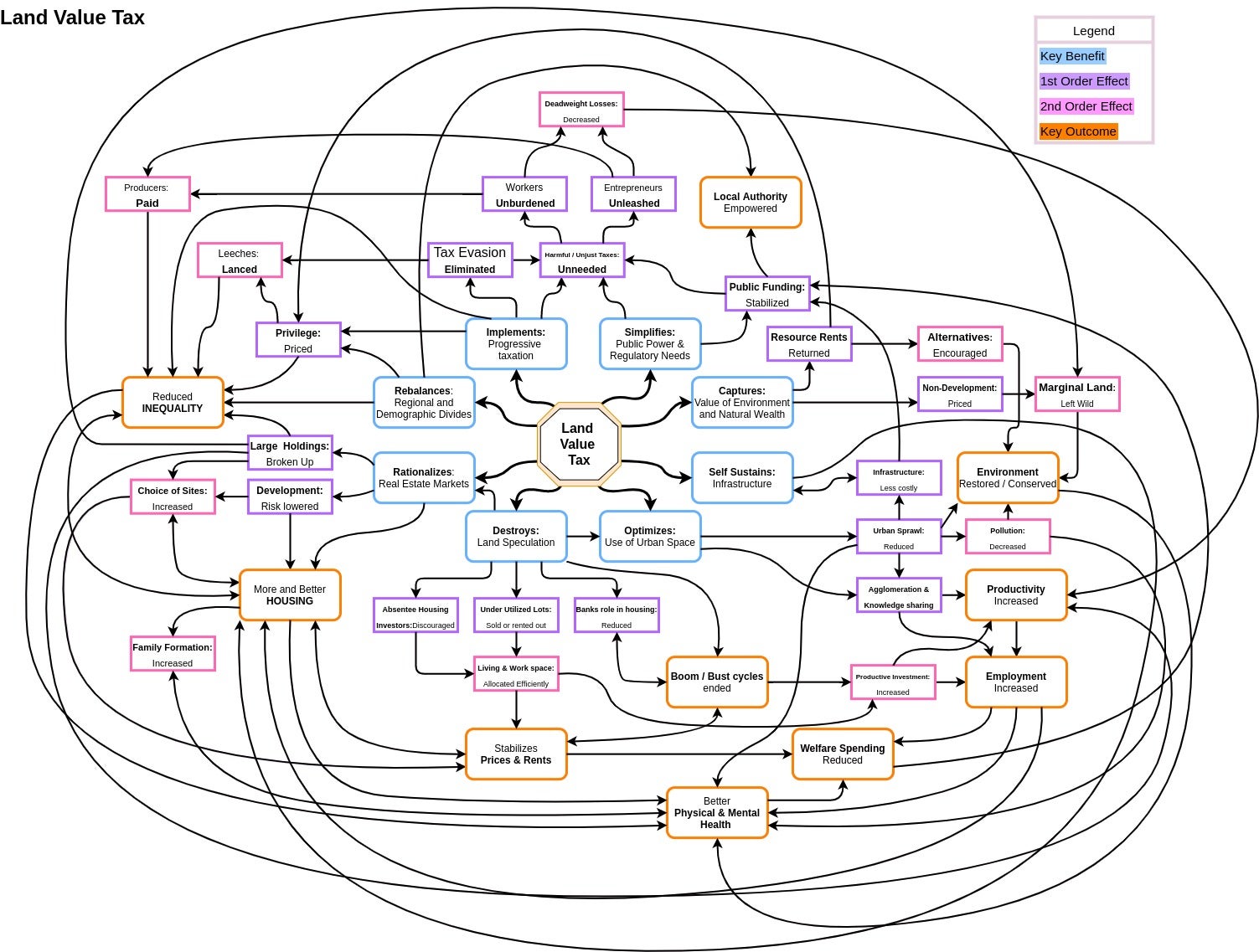

This image shows the following belief map for why Georgists believe that Land Value Tax would strongly benefit the economy:

Georgists also have a second belief map of beliefs and arguments for why they believe Land Value Tax is justified, which has many nodes connected to the belief map shown above.

[This section is unfinished]

Read More: The Georgist Theory of Property Versus the OATP.

3.5.3. Conspiracy Theory Beliefs

For a brief period (5 months), I believed in the 9/11 Controlled Demolition Conspiracy Theory, after someone introduced it to me. Specifically, I was shown some videos on the Physics & Reason YouTube channel. This channel mainly shows physics experiments and arguments for why its creator (Jonathan Cole) believes that the Twin Towers of the World Trade Center were destroyed on 9/11 by controlled demolitions, instead of plane crashes, fires, and gravity. I watched all the videos on the channel, and I was able to understand all the physics presented, so it made the controlled demolition conspiracy theory seem compelling to me for a while. I had also never seen anyone refute the arguments made on the channel, so it seemed that most of the arguments against the physics of the controlled demolition hypothesis either ignored the arguments made or were strawman fallacies. The selective information that I was shown created a frame of assumptions that I needed to reject or question in order to reject the likelihood of the conspiracy theory.

However, I was unaware that the channel was showing very selective arguments for controlled demolitions. I finally changed my mind and rejected the controlled demolition hypothesis after I watched the WTC Debate Chris Mohr vs Richard Gage. Chris Mohr was an investigative journalist who had read thousands of pages from the official NIST report on 9/11, and had called and talked to many expert physicists, scientists, controlled demolitionists, etc. During the debate, Mohr pointed out many of the problems with the controlled demolition hypothesis, such as: how many of the eyewitness accounts contradicted each other, how there was a consensus on the smell of jet fuel in the building, how ground zero was cold enough for rescue workers to walk on the rubble before WTC 7 collapsed, how the towers were 95% air by volume; the sheer force, mass, and speed of the falling top block; how the squibs below the plane crashes were comparable to air escaping from tire punctures, how the iron spheres may have been formed during the towers’ construction, how most of the concrete in the floors could’ve pulverized each other as they fell on top of one another, how the supposed controlled demolition would’ve been among the sloppiest controlled demolitions ever, how the WTC towers were among the most secure buildings in the entire world, how office workers would’ve seen, smelled, heard, or otherwise noticed explosives being installed in a building that was occupied 24/7, etc. Notably, the Square-Cube Law came up at 2:11:15, and it’s a major reason why the experiments shown on Physics & Reason can never perfectly simulate what actually happened on 9/11.

In an article that Chris Mohr wrote:

The video of that debate is not being released ([Richard Gage’s] own website admitted that twice as many people changed their minds in my direction as his during the debate).

From the audio recording that I listened to, I would say that Chris Mohr definitely won the debate, and I think it’s very telling that Richard Gage doesn’t want to release the video of the debate. The audio recording video that I watched in particular was edited to show URLs on the screen to blog posts and papers where 911 Truthers made counter-arguments against Mohr’s claims. I haven’t looked into them, and I probably won’t, because I’m satisfied with Mohr’s answers. Fortunately, I was able to find the debate recording because unlike most conspiracy theorists, I was looking for reasons why I am wrong instead of reasons why I thought I was right.

One reason why 9/11 conspiracy theorists find their conspiracy theories so compelling is that many of them believe they have Pascal’s Mugging on their side.

3.5.4. Randian Objectivism Belief Network Map

Objectivism Belief Network Map: This image shows the standard belief map for Randian Objectivists. Source. Note that although this image / pdf was created to present what Objectivists claim to believe, it doesn’t accurately model their beliefs the same way a proper belief map should. Objectivists aren’t aware that knowledge is coherentist, not foundationalist. Contrary to what they claim, they’re wrong that all of their knowledge can be derived from just three axioms. A true belief network wouldn’t derive all of its beliefs from a limited set of beliefs, because that’s not how human minds work. Hence, a proper discrete graph representation of their beliefs as a coherentist belief map would feature cells with statements that would describe the implicit things that they believe and misunderstand due to their blindspots.

[This section is unfinished]

4. How Heuristics Affect Different People’s Ideologies

Main Article: Rationality and Freedom.

4.1. Selective Attention, Selective Ignorance, And Recognizing Contradictions

Consciousness can be thought of as will and awareness. If one expands, the other detracts. Attention is the concentration of awareness on some phenomenon to the exclusion of other stimuli. Selective Attention is an individual’s limited capacity to choose what they pay attention to and what they ignore.

As we shall see in the videos below, humans have limited attention spans, so it’s always possible for someone to sneak details into our thoughts without us noticing.

- Leading Questions - Yes Prime Minister

- Selective Attention Test 1: Cups Game

- Selective Attention Test 2: Passing The Basketball

- Selective Attention Test 3: The Door Study

Everybody and every ideology has a different network of facts stored in their minds that they consider to be true. Nearly all these stored mental facts were cherry-picked to a great degree to fit their existing belief systems, rather than to challenge them. Selective attention causes implicit premises and contradictions to go undetected. Something that might be a contradiction in one belief network may not be a contradiction in another belief network and vice versa.

Belief networks can make things that are contradictions in reality seem like they are not contradictions within the believer’s worldview. And they can also make things that aren’t contradictions in reality seem like they are contradictions. It may be particularly difficult to catch circular reasoning fallacies since all the statements in the fallacy give coherence to each other, albeit in an illegitimate way.

4.2. Selective Attention And Blind Spots Shape Belief Networks

Selective attention and ignorance make it harder for people to notice holes in their belief networks. Ignorance of implicit assumptions and contradictions is just as important to belief networks as the belief nodes themselves, but this is harder to realize because belief maps usually don’t have explicit nodes for the believer’s selective ignorance of contradictions.

If the awareness of implicit assumptions or the ignorance of some contradictions had never occurred, then many of the core belief network hubs and nodes never would’ve appeared in the first place. When belief maps are created, it is important to keep separate notes or a separate diagram, explicitly detailing how the believers’ stream of sensory input, selective attention, implicit assumptions, and thought process played out. If we are unaware of the believer’s implicit assumptions and how their selective attention is directed, then we won’t be able to figure out how all the beliefs in the belief map formed in the first place. Blindspots affect how beliefs are formed.

For example, many Anarcho-Communists have a node in their belief network that if people don’t work under Capitalism, then they will die. But if they were to draw belief maps representing their own beliefs, they wouldn’t think to add a node or note to the diagram that they’re ignoring that literally every economic ideology requires people to work, lest they die. People are guaranteed to die on a deserted island if they don’t work to find food, water, and shelter.

As another example, Allodial Libertarians joke about who will build the roads, but they’re implicitly assuming that car-centric urban planning is the best when they say this, even though it definitely isn’t. Allodial Libertarians are focused on the wrong solutions to achieving the best public transportation, and their ignorance of good urban planning practices is a major reason why they fail to understand Georgism. Allodial Libertarians are clueless about urban planning because they don’t recognize the grave economic, political, and environmental importance of it, and it’s not a topic that is usually discussed in Libertarian circles to any significant extent, except for when they’re complaining about government changes that made urban planning worse.

The main reason why exposure to other people with different ideologies/beliefs is important is that it enables us to focus our attention on beliefs that may have been ignored by our selective ignorance / attention. To a somewhat lesser extent, it can also expose us to different logic/reasoning that can improve the correspondence of our belief networks with reality.

Individuals that have less selective attention and fewer gaps in their attention are more likely to have higher intelligence. Selective attention is one of the key reasons why people with above average IQ can potentially have ideas that really brilliant people never thought of, especially if they had more time to think about some of the things that lead to their more original ideas.

5. How Echo Chambers Work

5.1. How People Get Their Information

Pre-Reading: Social Delusions.

Most people’s belief networks are nowadays influenced by their social networks: online websites like YouTube, Twitter, Reddit, Facebook, the Internet in general, whatever influential books they’ve read, their friends, and whatever conclusions they make up on their own from all of that and their life experiences. Each person’s social network is usually an echo chamber or bubble, since most people prefer information that conforms with their own narratives, instead of what is true of reality.

The dominant, collective moral narrative of each online network selects for whatever information conforms best with the moral narrative of the network’s users. Within these online networks, people usually promote articles with misleading headlines as long as the headlines fit their narrative, even if they haven’t actually read the article, and even if reading the article wouldn’t support (and might even contradict) their narrative. In normie circles, people often parrot sentences and sound bites that supposedly expose contradictions in their opponents’ beliefs, whether they actually do that or not. This is a type of low-level thinking.

Wikipedia: Market Segmentation.

The most urgent problems of our times are the ones that cannot be discussed. Hence, echo chambers don’t tend to discuss the world’s most urgent problems (or at least not all or most of them).

The perception of unbiased thought varies depending on perspective. You just need to show arguments from the other side and address them, even if they’re not the strongest arguments that the other side has.

5.3. Censorship, Strawmen, And The Ideological Turing Test

The censorship of ideas is another important factor at play for making it more difficult to change people’s minds. As long as alternative beliefs are never mentioned and those things are kept out of consciousness, people are never going to even think about heretical ideas. Inside many of these networks, they will hear the worst strawmen constructions of the other side, so they will typically respond with fallacious rebuttals to heretical ideas if they do hear them. Many of the arguments that people hear about the other sides inside their social networks are N/A arguments.

There are many people who wouldn’t know how to refute arguments against their belief system if they stepped outside of their ideological bubbles and encountered criticisms from people who disagree with them. And for other topics and ideologies, they will only be familiar with the strawman versions of the opposing belief systems. This is why most ideologues fail the Ideological Turing Test, a test analogous to the Turing test: instead of judging whether a chatbot has accurately imitated a person, the test judges whether a person can accurately state the views of ideological opponents to the opponents’ satisfaction.

Smokescreens and the propagation of misleading statistics are also reasons why it’s so difficult to change people’s minds. Quotes and video clips are often intentionally taken out of context.

Read More: George Floyd and the Madness of Crowds - Blithering Genius.

Read More: Smokescreens - Brittonic Memetics.

5.4. Every Ideology Has Their Own Explanation Why They’re Not More Popular

Every ideology has their own narrative for why their beliefs are not more popular, whether it’s true or not. If they didn’t, this would be a major source of incoherence that would break many of the connections inside the ideology’s belief network, and that incoherence would pose a threat to the ideology’s propagation and continued existence.

- If you ask Communists, they will say that the Capitalist system and Pro-Western propaganda have brainwashed everybody into supporting Capitalism and wage slavery, and the CIA is responsible for overthrowing foreign attempts to establish Communism in other countries during the Cold War, supposedly because it is in the interest of the Capitalist system, and not for geopolitical interests.

- If you ask Ancaps, they will say that it is because the government brainwashed everybody into believing in the state with the help of the public education system.

- If you ask Efilists, they will say that it is because everybody’s DNA or biological code programmed them to ignore all the suffering in life, embrace Natalism, and ignore Antinatalist, Pro-Mortalist, and Efilist thinking. They are actually right that life is wired to some extent to be Natalist, but they are wrong that DNA is the puppeteer and the organism is the puppet. Once again, this is the rhetoric of exploitation. It’s not even true that DNA contains all the biological programming for organisms, since the cell is the correct medium of inheritance.

- If you ask Feminists, they will say that the Patriarchy is so well entrenched into the mainstream culture that it causes everybody to reject Feminism and the so-called equality of the sexes.

- If you ask Objectivists, they will say that the explicitly pro-selfishness preaching of Objectivism is unattractive to the modern, altruistic, and irrational culture. They will also say that Ayn Rand has been strawmanned, misrepresented, and demonized. They may further say that because a majority of the world is religious, the atheist beliefs of Objectivism are unappealing to most people.

The exception to this rule is when someone strongly believes in a very mainstream ideology. In that case, they would have a node in their belief network stating that their ideology is the most popular, or one of the most popular, because it’s the most correct. While this is basically an Appeal to the People Fallacy, most people find it reassuring when other people (especially a majority) agree with their beliefs.

Another observation is that almost every ideology assumes that its ideal is natural and stable, and thus would exist without some perturbing force that knocked us out of that state. But this is only an assumption that has not been sufficiently confronted by sound reasoning and reality itself.

6. Most People’s Identities Are Strongly Connected To Their Ideologies

Main Article: Utopian Ideologies.

- Ideologies are selected based on their ability to propagate themselves, not based on reason. For that reason, ideologies tend to make people very emotional because emotions are better for creating action, which is the propagation of said memes in this case.

- For many people, their ideology makes up so much of their personality that if their ideology were taken away from them, they would have little or no personality left.

- In many cases, if a person leaves their ideology, then they also lose many friends and important nodes of their social network, which disincentivizes people from wanting to discard their current belief systems.

- Most ideologues strive for social status and moral purity instead of reason. These typically involve ideological purity contests and other stupid games over who truly has the moral high ground.

8. The Sapir-Whorf Effect Affects People’s Subconscious Thoughts

Main Article: Linguistic Relativity and Thesaurus Arguments.

Language (words and definitions in particular) has the potential to influence people’s thoughts, and people won’t realize that language is influencing their thoughts when they’re being classically conditioned subconsciously and their selective attention isn’t focused on this manipulation. This is one of the root causes of false equivocation fallacies.

9. Most People Never Bother To Think About Epistemology

Generally speaking, people want philosophy to affirm their existing assumptions, not challenge them. So, some people treat philosophy as a rationalization game, not as a quest. Most people also don’t understand what “rationality” is, so they don’t understand what it means to be rational.

Since knowledge is formed as models of subjective experience of reality, truth and knowledge are both relative. A lot of the disagreements that happen between people can be attributed to some people having more informative models of reality than others. Most people also never seriously think about where their beliefs come from, or how they can verify that their beliefs are correct. Presuppositions are necessary to think, which makes it difficult for people to question them.

Additionally, many people think that reason alone would reach their ideologies as the correct conclusion or truth about reality, but that is wrong because: 1. there is no such thing as pure reason, or reason that isn’t constrained by sensory input and limited knowledge about reality, 2. there is no ultimate grounding for reality. Reason is not sufficient for deriving morality either.

9.1. Why Historical Philosophical Progress Has Been So Slow

Generally speaking, Philosophy has not been able to keep up with Science. Philosophers are still debating if there is a God or no God, one world or multiple worlds, free will or determinism, which theory of truth is the best, and so on and so forth. There are several reasons why it has been so historically difficult to create a rational, consistent philosophy that is free from contradictions:

- Most of human nature has been focused only on survival (and reproduction). There simply wasn’t any time or need to brainstorm a rationalist philosophy from scratch, since the primal instinct to survive and reproduce was the foremost and only necessary concern of primitive humans. It is only relatively recently in modern history that humans have developed a strong need for an implicit or preferably explicit philosophy.

- Every human’s knowledge begins from an essentially clean slate.

- People often get stuck asking the wrong philosophical questions.

- Since knowledge accumulated by older/previous humans cannot be automatically passed to newer generations, every new human’s best chance at forming a coherent, rationalist philosophy is to read the works of older/previous/other humans and build from there, assuming that they have the desire to do so and aren’t preoccupied with their own survival.

- A majority of humans are indoctrinated to believe in unreasonable things, such as religion. Religion doesn’t spread by expanding rationality. Instead, it spreads through some combination of conquest, proselytization, child indoctrination, fearmongering, and the prioritization of faith over reason.

- Humans are evolutionarily designed to focus on their own experiences and perspectives first and foremost. So even if someone creates a philosophy that is based on nothing but one’s sensory input and reasoning, it is not guaranteed to be consistent with what reality is outside of their sensory experiences and reasoning.

With that being said, if one wishes to be rational, it’s clearly not enough to live life only according to what someone has learned from their sensory input and experiences, and to never question one’s own intuitions and worldview.

9.2. The History Of Epistemology

- Humans evolved from the Great Apes.

- Humans adapted to use tools and primitive technologies to enhance their survival. This implies the use of knowledge to some extent.

- Humans had thousands and thousands of primitive religions, many of which were polytheistic.

- Civilizations arose. A portion of humanity’s modern knowledge emerged.

- A lot of nonsensical philosophies also developed (e.g. the Classical Elements of Greece, China, etc., Eastern philosophies, and the world’s most populous modern religions).

- Knowledge and new technologies continue to develop, but Hinduism, Buddhism, Christianity, Islam, and other religions continue to be humanity’s most dominant forms of philosophical thought.

- The Enlightenment, Scientific Revolution, and Industrial Revolution pioneered the way for reason to become more ingrained into Western culture, and people formed more rational ideas about the world.

- Today, religions, inaccurate philosophies, ill-thought-out politics, and especially Humanism are unfortunately still very influential in the world.

A crucial principle often overlooked in contemporary education: original thinking requires periods of intellectual solitude. The mind that is constantly stimulated by external input never develops the capacity for the kind of sustained internal reflection that produces genuinely new ideas.

– Michael S. Rose, Substack Note

9.3. People Disagree On What Counts As An Argument Or A Fallacy

Most people don’t understand and can’t identify fallacies like: False Equivocations, Post Hoc Ergo Propter Hoc, Appeal to Authority, Correlation & Causation, etc. The failure to recognize fallacies extends beyond that though.

- People conflate value knowledge with sensory knowledge. Epistemology and Science are based on sensory knowledge, whereas Ethics and Politics are based on value knowledge. It’s a Fallacy to apply sensory knowledge outside of its scope of appropriate use.

- Appeal to the People is thus a valid fallacy for epistemological or scientific reasoning, but it’s not a legitimate Fallacy when reasoning about ethical or political philosophy.

- Many vegans and Efilists accuse their opponents of Appealing to Nature, but Appealing to Nature is actually not a valid fallacy if resisting Nature is futile and self-defeating. In that case, it’s just reality.

- Many theists favor the Transcendental Argument for God (TAG), and they think it’s a fallacy to not believe in God, lest people don’t have a foundation for objective truth, objective morals, etc.

- Most people who believe in God don’t realize that using God as an explanation for everything is an Inverse Homunculus Fallacy.

- When Appeal to the Consequences is not a valid fallacy.

10. Genetic and Hormonal Differences Affect People’s Beliefs

For some people, it is likely that no matter how much argumentation they hear from the other side, they will not be persuaded, due to genetic and hormonal differences.

- In modern times, women tend to be more left-leaning, whereas men tend to be right-leaning.

- Approximately two-thirds of all Libertarians are men.

- The number of individualist genes puts an upper bound on the number of people who are inclined towards Libertarianism and other individualist ideologies. In the serotonin transporter gene, East Asians are significantly more likely to have the short “S” allele compared to Europeans who are relatively more likely to have the longer “L” allele, which may be a contributing factor to the more collectivist culture in East Asia versus the more individualist culture in Europe. Source.

- Personality tests like the Big Five show that liberals tend to score low on conscientiousness and high on imagination, whereas political conservatives tend to have the opposites of those traits.

- I’m skeptical of some of the findings mentioned in this article, but some of them are interesting.

- People who are chronically depressed are more likely to be Efilists or Antinatalists.

- White people in the United States have consistently voted more conservatively, while black people in the US have consistently tended to vote more liberally. These political differences are likely due to a combination of genetic and environmental (political) causes.

More articles talking about how genetics and biological factors influence political beliefs:

- A literature review by New York University and the University of Wisconsin found evidence from twin studies that political ideology is about 40% genetic.

- Study on twins suggests our political beliefs may be hard-wired – Pew Research Center.

- Brain scans remarkably good at predicting political ideology – Science Daily.

It’s likely that there are other examples where genes influence or determine people’s beliefs that haven’t been discovered yet.

Read More: Cognitive Levels.

11. What It Takes To Change People’s Minds

Ultimately, the key to rejecting any ideology is to keep letting the contradictions pile up in a person’s belief network until the entire belief system is rejected. With every additional contradiction, one or more connections between belief nodes are destroyed, which makes it easier to disconnect the other belief nodes if the believer is exposed to even more contradictions.
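The process described above can be sketched as a toy graph model. This is only an illustrative sketch under simplifying assumptions: beliefs are nodes, supporting connections are undirected edges, and a contradiction removes exactly one edge. The class name `BeliefNetwork`, its methods, and the labels "core value" and "belief A" through "belief C" are all hypothetical names invented for this example.

```python
from collections import defaultdict


class BeliefNetwork:
    """Toy model: beliefs are nodes, supporting connections are undirected edges."""

    def __init__(self):
        self.links = defaultdict(set)

    def connect(self, a, b):
        """Add a supporting connection between two beliefs."""
        self.links[a].add(b)
        self.links[b].add(a)

    def contradict(self, a, b):
        """Assumption: a contradiction destroys the connection between two beliefs."""
        self.links[a].discard(b)
        self.links[b].discard(a)

    def supported_by(self, core):
        """Return every belief still reachable from the core value."""
        seen, stack = {core}, [core]
        while stack:
            node = stack.pop()
            for neighbor in self.links[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        return seen


def build_example():
    """Build a small hypothetical network in which beliefs support each other."""
    net = BeliefNetwork()
    net.connect("core value", "belief A")
    net.connect("core value", "belief B")
    net.connect("belief A", "belief B")
    net.connect("belief B", "belief C")
    return net


net = build_example()
print(len(net.supported_by("core value")))  # all four nodes are connected

net.contradict("core value", "belief B")    # first contradiction
print(len(net.supported_by("core value")))  # belief B is still held up via belief A

net.contradict("belief A", "belief B")      # second contradiction
print(sorted(net.supported_by("core value")))
```

In this sketch, the first contradiction changes nothing visible, because belief B is still supported through belief A. The second contradiction cuts off belief B and everything that depended on it, which is one way to picture why contradictions usually have to pile up before a belief system collapses.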

Street Epistemology is a way to help people reflect on the quality of their reasoning through civil conversation. Street Epistemology tends to be more effective at changing people’s minds because it’s a great way to get people to explicitly question the implicit assumptions of their belief systems that they normally take for granted. Brittonic Memetics has written a post with great advice and applied street epistemology on how to persuade leftists specifically.

For counter-intuitive ideas like Georgism, visuals and diagrams are helpful for gaining the intuition and spatial reasoning for better understanding them. A picture is worth a thousand words.

The purpose of debates is typically not to convince the other debater, but rather to convince the audience listening to the debate, if there is one. The people who are debating are usually both highly convinced of what they believe in. The audience, on the other hand, is more likely to consist of people who are unsure of what to believe. Additionally, the audience consists of more people, so there are more potential minds for each debater to persuade, instead of only the one person that they are debating.

12. What is the Best Belief System?

12.1. The Best Core Knowledge

There is a limit to how much knowledge every person can attain about the world within their lifetime, so we have to be selective about what we choose to learn. One of the biggest problems is that people’s opinions about what the best facts are get tainted by the moral narratives that people preach about what they wish reality was like, instead of how reality is. Many people’s ideologies disobey the is-ought distinction, or Hume’s Guillotine, when their underlying assumptions are examined more closely.

“Best” also implies a theory of value since it’s a normative question. We assume that we should affirm life and value rationality, but we make all of our assumptions explicit, and we understand that there is no ultimate foundation for value.

Some objective facts that most people can agree on are the hard (universal) sciences. However, those sciences are not particularly relevant for figuring out how to structure a human society. There is also a lot of debate in the human-centric sciences, due to people’s partisanship and ideologies in academia. Unfortunately, this is even true for world-centric sciences like biology.

I recommend that the best knowledge we can have is:

- Biological and Evolutionary Knowledge: Evolution has many implications for humans and morality. People greatly underestimate the implications that evolution has for humans as a biological species.

- Memetic Systems: Understanding how memes and memetic systems propagate is very valuable because it enables people to better understand why people have different traditions and beliefs. Even if someone is unable to see how memetics affects their own ideologies, beliefs, language, and behavior, they will surely be able to recognize the patterns in others.

- Game Theory: The Foundation of Civilization is Cooperation. A solid understanding of game theory is necessary in order to understand how to achieve cooperation within human societies.

- The Effects of Technology: Most people tend to think of technology as a solution to problems, instead of being a cause of problems. This is a very naive view, as technology has had many negative effects on society, especially with how it has caused evolutionary mismatch, unprecedented overpopulation, dysgenics, and the replacement of old problems with new problems that are arguably not much better than the old ones.

- Epistemology: The key to building critical thinking skills is to study Epistemology, the study of knowledge. When one understands how knowledge works and how it is gained, that enables the individual to gain and process knowledge more efficiently.
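To make the Game Theory item in the list above more concrete, here is a minimal sketch of an iterated prisoner’s dilemma. The payoff values (3 for mutual cooperation, 1 for mutual defection, 5 and 0 for a one-sided defection) are conventional textbook numbers chosen for illustration, not figures from this essay, and the function names are hypothetical.

```python
# Payoffs for (move_a, move_b); "C" = cooperate, "D" = defect.
# Assumption: conventional illustrative values, not taken from the essay.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(opponent_history):
    """Cooperate first, then copy the opponent's previous move."""
    return opponent_history[-1] if opponent_history else "C"


def always_defect(opponent_history):
    """Defect no matter what the opponent has done."""
    return "D"


def play(strategy_a, strategy_b, rounds=10):
    """Play repeated rounds and return the total score of each player."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        # Each strategy only sees the other player's past moves.
        move_a = strategy_a(history_b)
        move_b = strategy_b(history_a)
        gain_a, gain_b = PAYOFFS[(move_a, move_b)]
        score_a += gain_a
        score_b += gain_b
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


print(play(tit_for_tat, tit_for_tat))      # two reciprocators
print(play(always_defect, always_defect))  # two defectors
print(play(tit_for_tat, always_defect))    # reciprocator meets defector
```

Under these assumed payoffs, two reciprocating players end up with far higher totals than two defectors, while a defector gains only a small edge over a reciprocator before being punished. This is the kind of result that makes game theory useful for thinking about how cooperation can be sustained.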

12.2. Advice For Eliminating Contradictions

What can be done to cause us to notice more of the hidden contradictions within our belief networks that are hidden by our selective attention?

If we are predisposed to think about the same topics over and over again, day after day (i.e. whatever political, moral, or religious memes we are particularly passionate about), then that limits our ability to expand our selective attention. This in turn reduces our ability to recognize the contradictions in our belief networks, especially if we only live in an echo chamber.

The solution is to focus less on the memes that we are passionate about, and to divert our attention to ideas that are more likely to be ignored by our normal flows of thought. This is difficult for most people because people need to have something to believe in, and most people do not care about rationality. People would also feel less happy if they thought less about things that they were passionate about, even if it is necessary for forming a rational worldview that recognizes more of the contradictions that they would otherwise ignore. These obstacles are easier to overcome for someone who strongly values rationality and education.

12.3. What content do you recommend for learning this information?

With respect to the outline above, I recommend the following sources for beginners:

- Blithering Genius’s Essays on Biological and Evolutionary Realism.

- ZC’s Collection of Links on: 1. Axiology / Morality, 2. Memetics, and 3. Culture.

- Game Theory & Society, and A Paradox of Rationality & Cooperation, and The Evolution of Trust (Interactive Game) by Nicky Case, and The Prisoner’s Dilemma between the Sexes.

- Industrial Society And Its Future by Ted Kaczynski, Technology and Progress by Blithering Genius, The Problem of Recognizing Problems, and Futurist Fantasies by T. K. Van Allen.

- ZC’s Collection of Epistemology Links.

Evolutionary biology is actually the least arbitrary starting point for forming a societal framework, since sociology, psychology, economics, etc. are all essentially applied biology. In a sense, biology is more “pure” than starting from a sociological, psychological, or similar point of view. It’s also not biased by morality, the world’s greatest delusion.