Reasoning Skills

Logic, Premises, Heuristics, Fallacies, Biases, and Proofs

1. Summary Of Formal Logic

We recommend reading forall x: Calgary for an introduction to classical logic.

- Logical Operations, Logic Symbols, Differences and Contexts with Natural Languages like English.

- Truth Tables, Order of Operations.

- Logical Forms.

- Conditions, Converses, Inverses, Contrapositives, English Conditional Sentences.

- Logical Quantifiers.

- DeMorgan’s Laws, DeMorgan’s Laws for Quantifiers.

- Tautologies, Contradictions.

- Inference Rules, Latin Terminology.

- Invalid Logical Arguments.

- Usage of NAND and NOR to create all other logical statements.
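Several of the topics above can be checked mechanically with truth tables. The following is a minimal sketch, not taken from forall x: Calgary: it builds NOT, AND, OR, and the conditional out of NAND alone, then compares formulas row by row. The helper names (`nand`, `equivalent`, `checks`) are illustrative assumptions.

```python
from itertools import product


def nand(a, b):
    """NAND is false only when both inputs are true."""
    return not (a and b)


# Every connective below is built from nand alone.
def not_(a):
    return nand(a, a)


def and_(a, b):
    return nand(nand(a, b), nand(a, b))


def or_(a, b):
    return nand(nand(a, a), nand(b, b))


def implies(a, b):
    # a -> b is the same as not (a and not b)
    return nand(a, nand(b, b))


def equivalent(f, g, arity):
    """Two formulas are equivalent if they agree on every truth-table row."""
    rows = product([False, True], repeat=arity)
    return all(f(*row) == g(*row) for row in rows)


checks = {
    "not": equivalent(not_, lambda a: not a, 1),
    "and": equivalent(and_, lambda a, b: a and b, 2),
    "or": equivalent(or_, lambda a, b: a or b, 2),
    "implies": equivalent(implies, lambda a, b: (not a) or b, 2),
    # De Morgan: not (a and b) == (not a) or (not b)
    "de_morgan": equivalent(lambda a, b: not (a and b),
                            lambda a, b: (not a) or (not b), 2),
    # A conditional is equivalent to its contrapositive...
    "contrapositive": equivalent(implies, lambda a, b: implies(not b, not a), 2),
    # ...but not to its converse.
    "converse": equivalent(implies, lambda a, b: implies(b, a), 2),
}

for name, result in checks.items():
    print(name, result)
```

The same `equivalent` helper can be reused to test any candidate rewrite of a formula; a construction from NOR would follow the same pattern.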

2. Distinguishing Between Different Types Of Contradictions

- An Ordinary Contradiction

- A Performative Contradiction

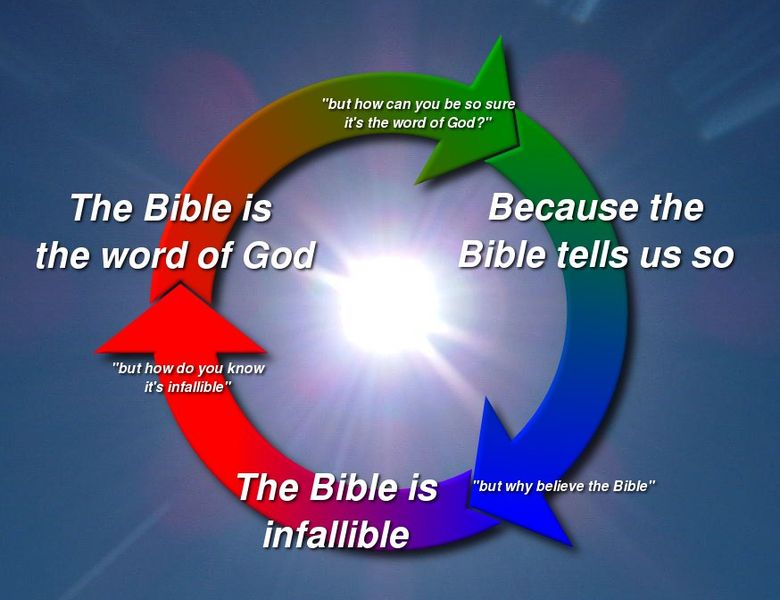

- Circular Reasoning (a circle of contradictions)

3. Properties of Premises

We should first note that distinguishing between necessary and sufficient conditions actually isn’t used a lot in critical thinking, based on the very broad knowledge that I’ve attained within my lifetime. But these properties are covered here nonetheless since they’re common in other introductions to logic.

3.1. Necessary Condition

- Q is true only if P is true.

- ’P is necessary for Q because P being true is needed for Q to be true’

3.2. Sufficient Condition

- If R is true, then S is true.

- ’R is sufficient for S because R is all you need to get S’

- ’R is enough to get S’

3.3. Conditions Possibilities

Any Condition Has Four Possibilities:

- Necessary & Sufficient [1]

- Not Necessary & Sufficient [2]

- Necessary & Not Sufficient [3]

- Not Necessary & Not Sufficient [4]

| | Necessary | Not Necessary |

|---|---|---|

| Sufficient | [1] | [2] |

| Not Sufficient | [3] | [4] |
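The four quadrants above can be illustrated with a small sketch. It assumes we can list every relevant case as a pair of truth values (P, Q); the function `classify`, the `QUADRANT` lookup, and the shapes example are hypothetical and only for illustration.

```python
def classify(cases):
    """Return (necessary, sufficient) for P with respect to Q.

    cases is an iterable of (p, q) truth-value pairs covering every
    situation under consideration.
    """
    cases = list(cases)
    # Sufficient: whenever P holds, Q holds too (if P then Q).
    sufficient = all(q for p, q in cases if p)
    # Necessary: whenever Q holds, P holds too (Q only if P).
    necessary = all(p for p, q in cases if q)
    return necessary, sufficient


# Quadrant numbers from the table above, keyed by (necessary, sufficient).
QUADRANT = {(True, True): 1, (False, True): 2, (True, False): 3, (False, False): 4}

# Hypothetical universe of shapes, each given as (is_square, is_rectangle):
# a square, an oblong rectangle, and a triangle.
shapes = [(True, True), (False, True), (False, False)]

# P = "is a square", Q = "is a rectangle"
square_for_rectangle = classify(shapes)
# P = "is a rectangle", Q = "is a square"
rectangle_for_square = classify((r, s) for s, r in shapes)

print(QUADRANT[square_for_rectangle], QUADRANT[rectangle_for_square])
```

Being a square is enough to be a rectangle but is not required, while being a rectangle is required for being a square but is not enough.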

3.4. Intrinsic and Instrumental Value

3.5. Normative And Descriptive Claims

3.5.1. Descriptive Claims

- Are statements that describe something.

- They express an understanding of how something is or could be.

- They don’t make evaluations.

3.5.2. Normative Claims

- Are statements that express an evaluation or judgment.

- Are often implicit.

- Relative to a standard or ideal.

- Words that are commonly associated with normative claims are: should, shouldn’t, right, wrong, and many more.

4. Statistical Reasoning Skills

4.1. Bayes’ Theorem

4.2. Correlation & Causation

- Positive Correlations: When events frequently occur together.

- Negative Correlations: The occurrence of one event makes it unlikely that the other event will occur.

- Correlation ≠ Causation: Alternative explanations include common/alternative causes and coincidences.

Facts about Correlation & Causation

- Correlation is correlated with causation.

- Causation causes correlation.

Read More: Why Correlation Usually ≠ Causation - Gwern.
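The common-cause explanation can be sketched with a short simulation. The variable names (`heat`, `ice_cream`, `sunburn`) and the noise levels are made-up assumptions: two outcomes are both driven by a hidden factor, so they correlate even though neither causes the other, and the correlation vanishes once one of them is set independently of the hidden factor.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(0)
n = 5000

# Hidden common cause (hypothetical): how hot the day is.
heat = [rng.gauss(0, 1) for _ in range(n)]
# Both outcomes depend on heat plus independent noise; neither affects the other.
ice_cream = [h + rng.gauss(0, 1) for h in heat]
sunburn = [h + rng.gauss(0, 1) for h in heat]
r_observed = pearson(ice_cream, sunburn)

# "Experiment": assign ice cream at random, ignoring heat.
ice_cream_randomized = [rng.gauss(0, 1) for _ in range(n)]
r_randomized = pearson(ice_cream_randomized, sunburn)

print(round(r_observed, 2), round(r_randomized, 2))
```

Under these assumptions the observed correlation is clearly positive, while the randomized one is close to zero.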

4.3. The Conjunction Fallacy

4.4. Type I And Type II Errors

Every hypothesis test has four possible outcomes:

| Decision | H0 is True | H0 is False |

|---|---|---|

| Fail To Reject H0; Reject HA | Correct | Type II Error |

| Reject H0; Choose HA | Type I Error | Correct |

- Type I Errors (False Positives) occur if the Null Hypothesis is rejected when it is actually true.

- A False Positive is a result that wrongly indicates that a given condition exists when it actually doesn’t (the positive result is actually false).

- Type II Errors (False Negatives) occur if we fail to reject the null hypothesis when it is actually false.

- A False Negative is a result that wrongly indicates that a given condition does not hold when it actually does (the negative result is actually false).

- ‘Fail to Reject’ the Null Hypothesis is used instead of ‘accept’ because failing to find evidence against H0 does not show that H0 is true: there is still a probability (β) of failing to reject H0 when it is actually false.

- α (level of significance) is the probability of making a Type I Error, if the Null Hypothesis is true.

- This is because α is the area of the rejection region under the null distribution: one tail of the curve for a one-tailed test, or both tails combined for a two-tailed test.

- If the null hypothesis is true, it’s still possible to get values for the mean that are located in those tails, but i

Alternate Table In Terms Of True/False Positives/Negatives

Every hypothesis test has four possible outcomes:

| Decision | H0 is True | H0 is False |

|---|---|---|

| Fail To Reject H0; Reject HA | True Negative | False Negative |

| Reject H0; Choose HA | False Positive | True Positive |
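The meaning of α and β in the tables above can be sketched with a simulation. This assumes a two-sided z-test on a normal population with known standard deviation; the sample size, effect size, and function names are hypothetical choices for illustration.

```python
import random
from statistics import NormalDist, fmean


def rejects_h0(sample, mu0, sigma, alpha):
    """Two-sided z-test with known sigma: True if H0 (mean == mu0) is rejected."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # both tails together hold alpha
    return abs(z) > z_crit


def rejection_rate(true_mu, mu0=0.0, sigma=1.0, n=25, alpha=0.05,
                   trials=4000, seed=1):
    """Fraction of simulated experiments in which H0 is rejected."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mu, sigma) for _ in range(n)]
        hits += rejects_h0(sample, mu0, sigma, alpha)
    return hits / trials


# H0 is true: every rejection is a Type I error (false positive).
type_i_rate = rejection_rate(true_mu=0.0)

# H0 is false (true mean is 0.5): every failure to reject is a Type II error.
type_ii_rate = 1 - rejection_rate(true_mu=0.5)

print(type_i_rate, type_ii_rate)
```

When H0 is true, the rejection rate should land near the chosen α. The Type II rate depends on the effect size and sample size, which is why β is not fixed by the choice of α alone.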

4.5. Regression to the Mean

The phrase “regression to the mean” is used to describe two different things. The first is a statistical effect: that outliers tend, on average, to score closer to the mean of a distribution when you test them a second time (or when you test their children, or their parents). This occurs because any departure from the mean is partly attributable to chance or error. If you select the outliers and re-test them, then the chance factor will be zero on average (for the second test). The chance of significant departure from the mean was positive on average for the first test, because you chose outliers. In this case, “regression to the mean” is an artifact of sampling.

For example: Imagine that you have a sample made up of fathers and sons and measured their IQs. For simplicity, we will ignore the effects of admixture from the moms (which should even out anyways) and the effect of increasing genetic load/dysgenics. If we take the 50% highest scoring dads and look at the scores of their sons, then we will observe a regression to the mean: The average IQ scores of the sons will be a bit lower than their fathers’ IQ scores. But then we could do the opposite: Select the 50% highest scoring sons and then look at their dads’ IQ scores. We will also observe a regression to the mean. This is a pretty ironclad way to make the point that it is an observational effect, not a genetic one. As another example, the same phenomenon would be observed if one picked the lower IQ people out of a sample population: their children should be expected to have higher IQs than their parents, due to Regression to the Mean. It works both ways.

The second way that regression to the mean is used (by Gregory Clark, for instance) describes the tendency to return to the average of the population at large over generations, due to admixture. So, over 10 generations, the descendants of exceptional people, in good or bad ways, are likely to become more similar to normal people. This is a slow process because people self-segregate in plenty of ways, but it is inexorable because no society is perfectly segregated. The population that exceptional people return to the mean of is that which they mix with. So, if you predict that your descendants will only mix with white people, you can predict that they will return to the mean of white people. If you predict that your descendants will mix with society at large (a reasonable assumption in a Western country), you can predict that they will return to the mean of the population as a whole. I propose to reserve the phrase “regression to the mean” for the sampling effect, and “admixture towards the mean” for the mixing effect.

– Felix, Brittonic Memetics
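The sampling effect described in the fathers-and-sons example can be reproduced with a toy simulation. The model here is an assumption made only for illustration: each father and son share a family component, and each score adds independent chance. No direction of inheritance is modeled, yet selecting on either group shows regression in the other.

```python
import random
from statistics import fmean

rng = random.Random(42)
N = 20000

# Hypothetical model: a shared family component plus independent chance per person.
shared = [rng.gauss(100, 10) for _ in range(N)]
fathers = [s + rng.gauss(0, 10) for s in shared]
sons = [s + rng.gauss(0, 10) for s in shared]


def top_half_means(selected_on, other):
    """Pick the top 50% by `selected_on`; return (their mean, paired mean in `other`)."""
    pairs = sorted(zip(selected_on, other), reverse=True)[: N // 2]
    return fmean(a for a, _ in pairs), fmean(b for _, b in pairs)


# Select the highest-scoring fathers and look at their sons.
top_father_mean, their_sons_mean = top_half_means(fathers, sons)

# Select the highest-scoring sons and look at their fathers.
top_son_mean, their_fathers_mean = top_half_means(sons, fathers)

print(round(top_father_mean, 1), round(their_sons_mean, 1))
print(round(top_son_mean, 1), round(their_fathers_mean, 1))
```

In both directions the unselected group scores closer to 100 than the selected group, while still above it, which is what one would expect from a sampling artifact.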

Blithering Genius has also written an article explaining Regression to the Mean. It’s written differently of course, but some people might find it helpful to read it.

How does regression to the mean affect IQ scores (and intelligence)?

This depends on whether we’re talking about regression to the mean, the statistical phenomenon, or admixture towards the mean, the mixing phenomenon. If we’re talking about regression to the mean, then there are ways to remove the effects of luck and sampling bias from data, such as testing the IQ scores again. Sampling bias isn’t likely to persist in IQ scores, in the long run.

If we’re talking about admixture towards the mean, then the IQ scores of a person’s descendants will mostly depend on the population that that person and their descendants are mixing with. Likewise, if two races mix with each other, then the IQ of the descendants will depend on the average IQs of the ancestral populations, how many people were mating from each race, and how many descendants each race had.

5. Heuristics

5.1. The Philosophical Razors

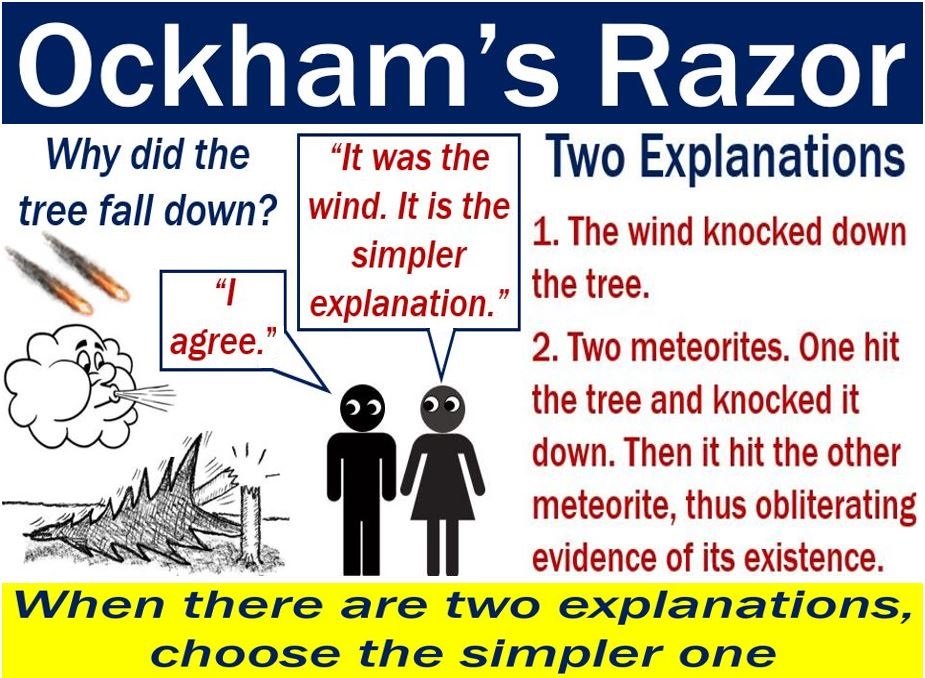

In philosophy, a razor is a principle or rule of thumb that allows one to eliminate (“shave off”) unlikely explanations for a phenomenon, or avoid unnecessary actions. Philosophical razors are a type of heuristic that gets applied to knowledge claims. The razors include:

| Razor Name | Principle | Explanation |

|---|---|---|

| Occam’s Razor | Parsimony / Simplicity | Simpler explanations are more likely to be correct; avoid unnecessary or improbable assumptions. |

| Hanlon’s Razor | Attribute Stupidity over malice | Never attribute to malice that which can be adequately explained by stupidity. |

| Hitchens’ Razor | Dismiss assertions without evidence | What can be asserted without evidence can be dismissed without evidence. |

| Hume’s Guillotine | Is / Ought Distinction | What ought to be cannot be deduced from what is. |

| Alder’s Razor | Empirical / Experimentable | If something cannot be settled by experiment or observation, then it is not worthy of debate. |

| Sagan Standard | Evidence | Extraordinary claims require extraordinary evidence. |

| Popper’s Falsifiability Principle | Falsifiability | For a theory to be considered scientific, it must be falsifiable. |

| Grice’s Razor | Implicature Before Semantics | As a principle of parsimony, conversational implicatures are to be preferred over semantic context for linguistic explanations. |

- Occam’s Razor: Simpler explanations are more likely to be correct; avoid unnecessary or improbable assumptions.

- Hanlon’s Razor: Never attribute to malice that which can be adequately explained by stupidity.

- Hitchens’ Razor: What can be asserted without evidence can be dismissed without evidence.

- Hume’s Guillotine: What ought to be cannot be deduced from what is. A separate principle, usually called Hume’s Razor, concerns causes: “If the cause, assigned for any effect, be not sufficient to produce it, we must either reject that cause, or add to it such qualities as will give it a just proportion to the effect.”

- Alder’s Razor: If something cannot be settled by experiment or observation, then it is not worthy of debate. I.e. Only empirical claims are worth debating.

- Sagan Standard: Extraordinary claims require extraordinary evidence.

- Popper’s Falsifiability Principle: For a theory to be considered scientific, it must be falsifiable.

- Grice’s Razor: As a principle of parsimony, conversational implicatures are to be preferred over semantic context for linguistic explanations.

The primary goal of the philosophical razors is to figure out what can be quickly and summarily dismissed. The rationale is to avoid wasting time on things that are probably false and/or will lead to dead ends.

This rationale can be seen as a subcategory of rational ignorance. Although there might be knowledge to be gained by fully evaluating something that a philosophical razor dismisses, the costs of understanding the claims and arguments in question would exceed the benefits. Most of the knowledge gained from exploring razor-dismissed arguments in depth would consist of understanding why the arguments are wrong, only in greater detail than the razors already provide when dismissing them summarily.

Occam’s Razor is often applicable when analytic statements are better explanations than synthetic statements. To quantify or rank simplicity when applying Occam’s Razor, one could compare which theory or belief requires the fewest propositions to reach its conclusion. Conclusions that are made on a definitional basis are more likely to be simple, if they’re applicable.

5.2. Heuristics For Solving Problems

The book How to Solve It by George Polya contains a dictionary-style set of heuristics, many of which have to do with generating a more accessible problem. For example:

| Heuristic | Informal Description | Formal analogue |

|---|---|---|

| Analogy | Can you find a problem analogous to your problem and solve that? | Map |

| Auxiliary Elements | Can you add some new element to your problem to get closer to a solution? | Extension |

| Generalization | Can you find a problem more general than your problem? | Generalization |

| Induction | Can you solve your problem by deriving a generalization from some examples? | Induction |

| Variation of the Problem | Can you change your problem to create a new problem(s) whose solution(s) will help you solve your original? | Search |

| Auxiliary Problem | Can you find a subproblem or side problem whose solution will help you solve your problem? | Subgoal |

| Use a related solved problem | Can you find a problem related to yours that has already been solved and use that to solve your problem? | Pattern Recognition / Matching / Reduction |

| Specialization | Can you find a problem more specialized? | Specialization |

| Decomposing and Recombining | Can you decompose the problem and “recombine its elements in some new manner”? | Divide and conquer |

| Working backward | Can you start with the goal and work backwards to something you already know? | Backward chaining |

| Draw a Figure | Can you draw a picture of the problem? | Diagrammatic Reasoning |

Other Heuristics:

5.3. Prioritization Of Thinking In Philosophy

- Putting “What Is” before “What Ought”.

- Putting “How?” before “Why?”.

5.3.1. The Sequels To The Mirror Test

Most homo sapiens can pass the mirror test, but a majority of them cannot pass the following sequels:

- Recognizing that supernatural deity(ies) don’t exist (their existence is false).

- Recognizing the non-existence of free will.

- Recognizing the non-existence of an ontology for “objective morality”.

- Recognizing the negative effects of private landownership (the “Economic Mirror Test”).

6. Rational Ignorance

6.1. The Rationale of Rational Ignorance

Wikipedia: Rational Ignorance.

Given the vastness of reality and all there is to know, there is only so much that we humans can learn within our finite lifespans. That said, one of the first steps someone should make in philosophy/epistemology is to decide which concepts they should learn and which ones they should pursue rational ignorance towards. Then they must order the importance of all they wish to learn and learn the most important concepts first.

If someone chooses to pursue rational ignorance, then they typically have to rely on ethos. If they don’t know how something works, then they have to rely on someone who does know how it works. However, ethos by itself is not a valid argument, which makes relying on others more undesirable and further emphasizes the importance of the virtue of independence.

Philosophical Razors and Heuristics enable us to utilize the concept of Rational Ignorance more efficiently.

7. Fallacies

- Formal fallacies are defects in the logical forms of arguments, not the content.

- Informal fallacies are defects in the content of arguments, not the logical forms. These occur when the premises do not support the conclusion.

- There isn’t some precise criterion that separates one fallacy from another. The more important distinction is whether the person is addressing the ideas or not, but it is helpful to give names to the informal fallacies so that the reasoning errors can be more easily identified and corrected.

7.1. Ad Hominem Fallacies

Ad Hominem Fallacies are fallacies that attack the person making the argument instead of the argument itself.

- Abusive Ad Hominem: Attacks the speaker directly.

- Guilt By Association: Whenever one tries to argue against a certain view by pointing out that some unsavory person is likely to have agreed with it. Example: Nazis drank water, but that doesn’t mean that water is bad.

- Genetic Fallacy: Condemns the origin of a claim instead of the claim itself. Alternatively, the origin of a claim may be wrongly used to support the claim in place of valid reasons.

- Circumstantial: Claims that the speaker is only advancing the argument to advance their own interests (attacking the speaker’s circumstances instead).

- Tu Quoque: Accuses a person of acting in a manner that contradicts some position that they support, and concludes that their view is worthless. Although pointing out hypocrisy (tu quoque) or a conflict of interest (circumstantial ad hominem) doesn’t attack the argument directly, both are still worth pointing out. If the opponent can’t defend their apparent hypocrisy, then they have a contradiction. And if they have a conflict of interest, then the argument should probably be evaluated by someone with no conflict.

7.2. Straw Man and Hollow Man

A Straw Man Fallacy is when a debater refutes an argument different from the one actually under discussion, while not recognizing or acknowledging the distinction. Straw Man Fallacies are common because ideologues often don’t make honest efforts to understand their opponents.

Another reason is that it’s common for arguments made by one person or group to be attributed to everybody whom others associate with that person or group, even if they all have different reasoning for their positions (or even different positions that only seem similar to outsiders). To make it easier to explain such scenarios, we shall coin a neologism. An N/A Argument is when someone makes an argument against their opponent without realizing that the argument applies to people associated with the opponent, or holding similar positions, but not to the opponent himself.

The inverse of the Straw Man Fallacy is called the “Hollow Man Fallacy”, and is sometimes called the “Motte And Bailey Fallacy”. The Hollow Man Fallacy occurs when someone doesn’t present a well-defined position that can be critiqued, or when they don’t present their true position or beliefs.

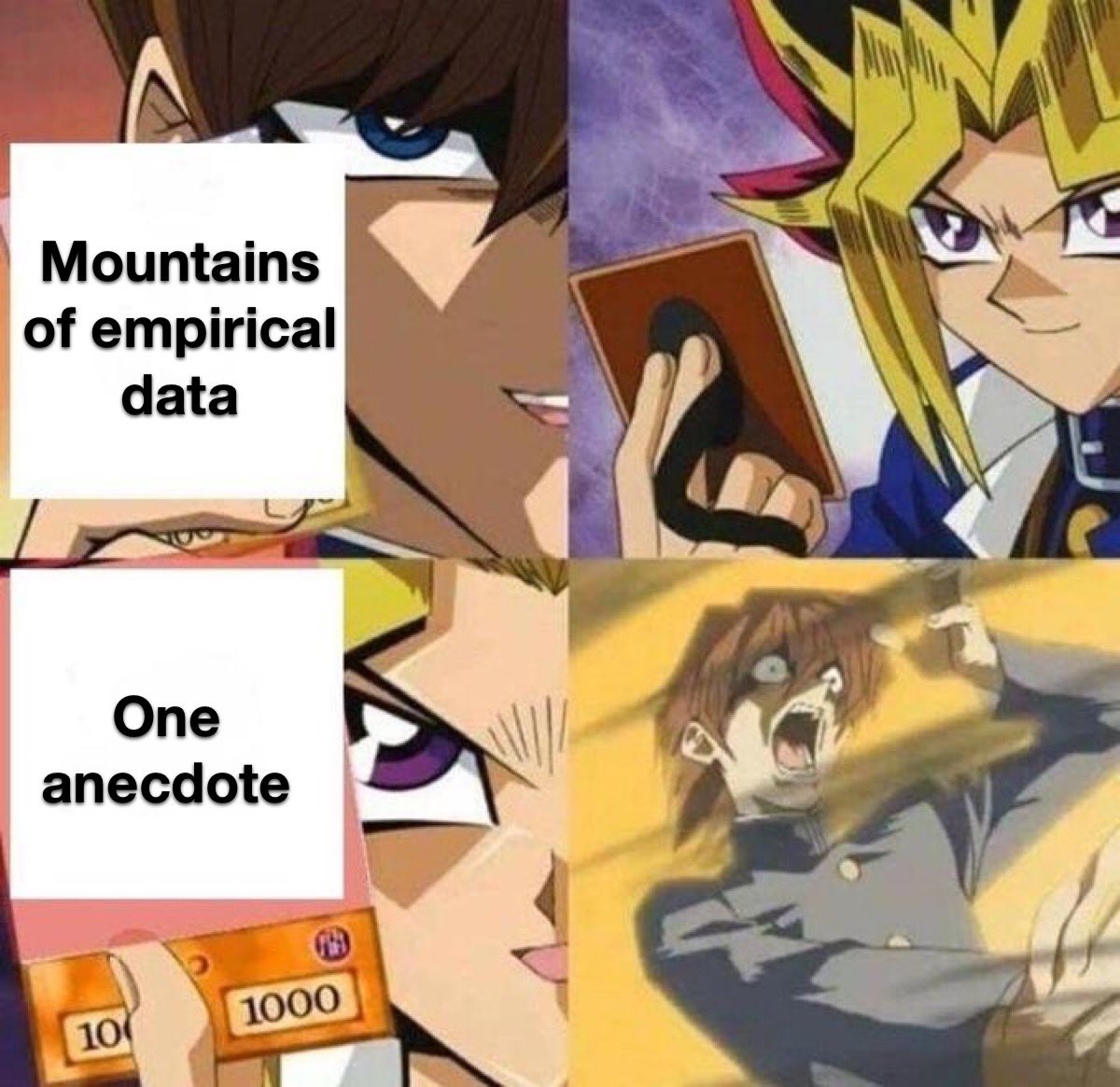

7.3. Anecdotal

7.4. Slippery Slope

Once one event occurs, other related events will follow, and this will eventually lead to undesirable consequences. Examples of the Slippery Slope Fallacy in Politics:

- Gay Marriage will lead to polygamy, incest, and bestiality.

- First someone might start using tobacco, then they’ll start using cannabis, and then before they know it, they’re using methadone too.

- Today’s jaywalker and litterer will be tomorrow’s thief and window breaker.

- If we let one Nazi speak freely, then soon we’ll have a second Holocaust.

- Socialism is just a gateway into communism.

Note: Arguing against gun control laws and more government regulation is not a slippery slope fallacy because more gun control laws and more government regulations are still being proposed. For many leftists, the US will never have enough gun control unless it completely bans guns.

7.5. False Equivocation

Analogies are a way of expressing perceived patterns. Unfortunately, analogies are also very susceptible to false equivocation fallacies, especially when people talk about politics and other things that they don’t understand very well. Analogies and false equivocation fallacies are strongly affected by the Sapir-Whorf Effect. People often use analogies without checking (or even realizing) that all the necessary conditions for a valid comparison are satisfied.

7.5.1. Category Errors

7.5.2. Composition And Division Fallacies

- The Fallacy of Composition arises when we assume that the whole has the same properties as its parts. It is an informal fallacy.

- The Fallacy of Division arises when we assume that the parts of some whole have the same properties as the whole. It is an informal fallacy, and it is the opposite of the Fallacy of Composition.

7.6. Begging the Question

It can be particularly difficult to catch circular reasoning fallacies since all the statements in the fallacy give coherence to each other, albeit in an illegitimate way.

When trying to understand why the logical form of circular reasoning is invalid, recall that a false statement implying a chain of false statements does not generate a true statement. So although F -> F is T on the truth table when evaluating conditionals, true statements cannot be generated from false statements when making arguments.

7.7. Appeal to Consequences

The Appeal to Consequences Fallacy occurs when someone assumes that the conclusion is bad, without honestly assessing and justifying whether the conclusion is really bad or not. Arguments that appeal to the consequences beg the question by appealing to emotion. Examples:

- “If God doesn’t exist, then life is meaningless”.

- “If people believe in racial differences, then people will become racist”.

- “If abortion is a form of eugenics, then abortion is bad”.

- Abortion -> Eugenics

- Eugenics is bad.

_ _ _ _ _ _ _ _ _ _ _ _ _

Conclusion: Abortion is bad (because it entails eugenics).

All appeal to the consequences fallacies can be written as conditional statements. In all of these conditional statements, the hypotheses are correct, but the statements fail to justify their conclusions. Hence, the conclusion made by the entire argument is false.

A person is not appealing to the consequences when they’re saying that we should do this or that, and everybody agrees on the conclusion. Consequentialist Ethics are not based on a fallacy when they are not appealing to a subjective point of view.

7.8. Pascal’s Wager

Pascal’s Wager involves multiple fallacies:

- Assuming that there could be no possible benefit to Atheism.

- There could only be one possible type of “God”.

- Appeal to Consequences?

Instead of favoring Pascal’s Wager, I propose the Atheist’s Wager: You should live a good life and be a nice person, but leave religion alone. If God is loving and kind, he will forgive you for not believing in him and reward you in the afterlife. If God punishes you despite having been a good person all your life, then God is unjust and you shouldn’t worship him.

7.9. Pascal’s Mugging

See: Wikipedia: Pascal’s Mugging.

See: Pascal’s Mugging with respect to AGI Safety - Robert Miles.

To summarize the links above, Pascal’s Mugging arguments often commit fallacies similar to the kind found in Pascal’s Wager arguments. Pascal’s Mugging arguments can also be one-sided, ideological, and/or irrational, particularly if:

- The events they’re concerned with are impossible,

- The supposed benefits of being concerned are non-existent, misunderstood, or fallacious, and/or

- The supposed disadvantages to not being concerned are non-existent, misunderstood, or fallacious.

However, arguments about minding the utility in considering or preparing for something devastating are valid if the consequences are conceivable. Actions that have high utility with low probability are very common in nature. For many species, living to adulthood is low probability, but if they make it, they could have a million offspring. For an elephant seal male, becoming a beach master is low probability and high utility. It seems paradoxical to some people that a behavior can be adaptive if it fails in most cases. e.g. a hyper-aggressive male strategy could fail in most cases, but succeed enough that it is selected for. – Blithering Genius
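The point about low-probability, high-utility actions is an expected-value calculation. The sketch below uses made-up numbers purely for illustration (they are not measurements of any species): a long shot with a huge payoff can beat a safe bet on average, while an event with zero probability contributes nothing no matter how large the claimed payoff is.

```python
def expected_value(probability, payoff):
    """Average payoff per attempt if the attempt were repeated many times."""
    return probability * payoff


# Hypothetical numbers, chosen only to illustrate the arithmetic.
long_shot = expected_value(0.001, 1_000_000)   # rarely succeeds, huge payoff
safe_bet = expected_value(0.9, 10)             # usually succeeds, small payoff
impossible = expected_value(0.0, 10**100)      # cannot happen, payoff is irrelevant

print(long_shot > safe_bet, impossible)
```

This is one way to see why a strategy that fails in most cases can still be selected for, and why the size of the stakes alone cannot rescue an argument about an impossible event.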

Arguments That May Use Pascal’s Mugging:

- AGI Safety

- Denying Racial Differences

- Overpopulation

- Was The Collapse Of The World Trade Center A Controlled Demolition?

- Any Others?

If one is able to clearly prove that an undesirable event is guaranteed to have negative consequences and is likely to occur, then arguing to take action against such an event does not qualify as a Pascal’s Mugging argument, even if opponents may label it as one (e.g. eventual overpopulation).

Regarding AGI misalignment: AGI is to sand as raising a child and all other problems are to rocks. The fear of AGI somewhat follows the pattern of Pascal’s Mugging, which rationalizes focusing on AGI misalignment for unreasonable reasons. You could have a child or a country that grows up to be good, evil, strong, weak, etc., so you don’t need AI to think about these philosophical issues. But there’s no problem in worrying about these problems in AI, as long as they relate to other issues and would still be relevant even if AI misalignment never happens.

7.10. Appeal to the People

Establishes the truth of some claim P on the basis that a lot of people believe P to be true.

Note: It’s not possible to make an Appeal to the People Fallacy when dealing with emotional/value knowledge, since that knowledge is supposed to be subjective and can be based on popular consensus.

7.12. The Vast Literature Fallacy

The Vast Literature Fallacy is related to the Appeal to Authority Fallacy, but it’s different since it focuses more on insisting that people must read large volumes of material before they can truly understand (and be inclined to accept) the arguer’s position.

The vast literature fallacy is a denial of the limited time and ability of people to acquire knowledge as subjects; hence it ignores the concept of Rational Ignorance.

7.13. The Mechanistic Fallacy

The mechanistic fallacy is where someone describes how something works as a justification that something works, rather than providing evidence to prove that something works, as described. Describing how something works is not evidence that it works. People can only describe how they think something works, or what they want people to believe regarding how something works.

When the mechanistic fallacy is employed, the subject or process is described incorrectly. There are thus at least two fallacies involved whenever the mechanistic fallacy is used. The mechanistic fallacy usually involves incorrectly prioritizing a weaker form of evidence over a stronger form of evidence, like statistical data.

The mechanistic fallacy commonly appears when justifying fad diets, pseudoscience, and conspiracy theories. As another example, every proponent of every incorrect economic theory makes the mechanistic fallacy. They all describe how they think money, interest rates, and boom-and-bust cycles work. However, there is little evidence to prove that their theories are correct. If or whenever any of their theories are implemented, what actually happens does not work the way they describe it.

8. Cognitive Biases

Knowledge of cognitive biases is hard to use effectively, because the biases are informative priors in some cases, and in the common harmful cases one will have already learned better. It’s always easiest to see how someone else is falling prey to confirmation bias, and hardest to see it in oneself.

For a more complete and more thorough listing and explanation beyond what little is shown here, see: Wikipedia: List of Cognitive Biases.

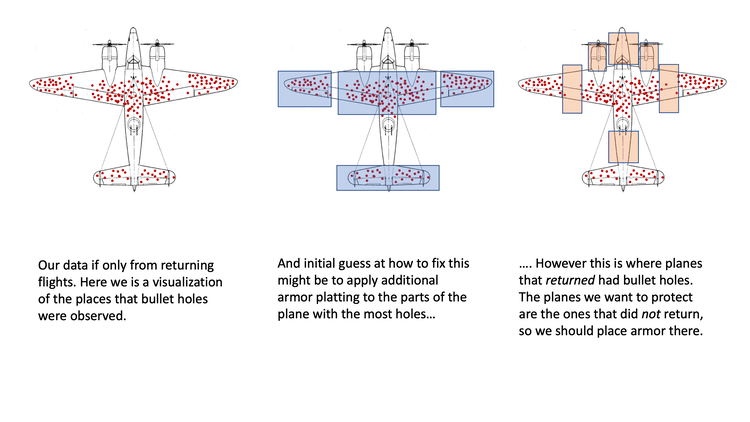

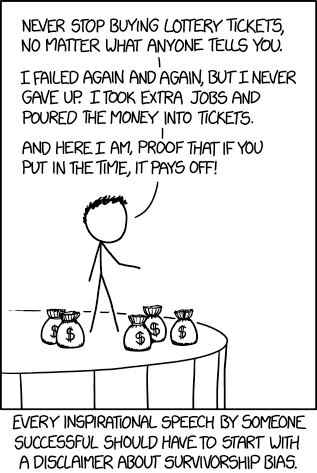

8.1. Survivorship Bias

8.2. Frequency Illusion

8.3. Hindsight Bias

8.4. A Secular Explanation Of The Law Of Attraction

The statistical explanation of miracles: Littlewood’s Law.

Wikipedia: The Law of Attraction.

To get something, two things must be true:

- [1] The thing must exist.

- [2] You must see it.

The Law of Attraction obviously can’t change [1], but if [1] is already true and you aren’t looking for it, you’ll still miss it. So, by making you look for it and fixing [2], the Law of Attraction “seems like” it made [1] happen as well.
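Littlewood’s Law, mentioned at the top of this section, is a back-of-the-envelope calculation. The sketch below uses the figures usually attributed to Littlewood, which should be treated as assumptions here: a “miracle” is a one-in-a-million event, and a person notices about one event per second during roughly eight alert hours per day.

```python
# Assumptions (the commonly quoted figures, not measurements):
MIRACLE_ODDS = 1_000_000        # a "miracle" is a one-in-a-million event
EVENTS_PER_SECOND = 1           # events noticed while alert
ALERT_HOURS_PER_DAY = 8

events_per_day = EVENTS_PER_SECOND * ALERT_HOURS_PER_DAY * 3600
days_per_miracle = MIRACLE_ODDS / events_per_day

print(round(days_per_miracle, 1))
```

Under these assumptions a one-in-a-million event is expected roughly once a month, so noticing the occasional striking coincidence needs no special explanation.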

8.5. Biased Factor Attribution

Biased Factor Attribution is a cognitive bias where a person insists that only one of the causes to a phenomenon should be blamed, and that other causes are irrelevant or impossible. In reality, the phenomenon has multiple causes that are just as responsible (perhaps even more so), not just one. If someone cannot acknowledge that other causes helped contribute to the effect in question, then this probably indicates that they have motivated reasoning and/or confirmation bias.

Examples of Biased Factor Attribution:

- Cornucopians insist that overpopulation can only ever be caused by not using resources efficiently enough, and is never caused by population growth and ecological overshoot. This is naive.

- People might blame natural phenomena and/or ecological problems on climate change, instead of considering that other phenomena could’ve been responsible instead.

- Most Georgists insist that expensive housing prices can only be caused by not using land efficiently enough. Even if immigration causes the demand for housing to increase while the supply stays the same, they insist that immigration is not a problem because a land value tax would entice landlords to build as much housing as there is demand for. This is naive, because: 1. increasing the demand for housing still increases the price (whether it’s caused by immigration or not), 2. population growth can cause other problems, and 3. not all humans are created equally.

- Libertarians insist that markets can never fail. They insist that all economic failures are caused completely by government interference in the economy. This is naive.

- Likewise, many people have biased opinions about what caused the Great Depression and what caused the Great Recession. In particular, many people will blame their least favorite political party for causing these economic recessions, while insisting that their favored party had nothing to do with it.

- When people argue over what ended the Cold War, some people want to attribute the fall of the USSR exclusively to Reagan, whereas others attribute it exclusively to Gorbachev, without considering that multiple factors led to the fall of the USSR.

- Race denialists insist that racial disparities are only caused by environmental factors, even though genetic factors are the primary cause.

I will list other examples here, if I think of any.

I tried searching for this concept on the following Wikipedia pages, but I haven’t really found anything describing what I’m thinking about. It seems that “attribution bias” pertains only to psychology, and isn’t used to describe biases towards other phenomena in academia.

9. Question Evasion Techniques

Question Evasion Techniques are various techniques that people will use to avoid questioning their beliefs and worldview:

- Ignoring the question

- Acknowledging the question without answering it

- Questioning the question by:

    - requesting clarification

    - reflecting the question back to the questioner, for example saying “you tell me”

- Attacking the question by saying:

    - “the question fails to address the important issue”

    - “the question is hypothetical or speculative”

    - “the question is based on a false premise”

    - “the question is factually inaccurate”

    - “the question includes a misquotation”

    - “the question includes a quotation taken out of context”

    - “the question is objectionable”

    - “the question is based on a false alternative”

- Attacking the questioner

- Declining to answer by:

    - refusing on grounds of inability

    - being unwilling to answer

    - saying “I can’t speak for someone else”

    - deferring answer, saying “it is not possible to answer the question for the time being”

    - pleading ignorance

    - placing the responsibility to answer on someone else

- Tactical Nihilism

9.1. Tactical Nihilism

Tactical Nihilism: A bad-faith debating tactic where the debater selectively rejects commonly understood concepts, systems of classification, or terminology used by their opponent, halting any substantive debate, but supports their own viewpoints using those same concepts.

Instead of evaluating the logic of an opposing argument, the tactical nihilist will feign confusion, and attack a term used by their opponent. If the opponent, unaware of the tactic, takes the debater’s apparent confusion in good faith, the conversation is quickly derailed into long discussions where the debater will continually request more and more evidence simply to establish the term’s definition or validity, which the debater really understood in the first place. The debater will split every hair, attempt to deconstruct other words and concepts, and request more evidence. The original argument is forgotten and appears to be unaddressed by the opponent, and the debater is then able to feel victorious. Example:

Alice: White people, becoming a dwindling and hated minority in the United States, face challenges as a group and should be allowed to advocate for their interests as a race.

Bob: Race? What even is that, really? And what is white? What about Italians and the Irish? I don’t even know what you’re talking about.

Alice: Wait, you support black, Hispanic and Asian minority activism. You know what race is, and I’ve never heard you try to deny or deconstruct any of those other racial identities before. And you sure seem to know what white people are when you’re attacking them for white privilege, or when you think there are too many of them. Please stop with the tactical nihilism.

Related: Beware Isolated Demands For Rigor - Scott Alexander.

9.2. Tactical Empiricism

Tactical Empiricism is when a debater expects their opponents to supply large amounts of evidence to support their positions, while not holding themselves to the same standard of accurate, high-quality information. Tactical Empiricism is similar to Tactical Nihilism since both tactics are unfairly selective about the standards of knowledge that one must meet to defend an argument.

How is tactical empiricism different from the genetic fallacy?

They are almost synonymous with each other, but tactical empiricism is somewhat broader. Genetic fallacies explicitly attack data or information based on its source. Tactical Empiricism can do that as well, but it also covers cases where a person is uninterested in the truth and therefore avoids searching for data that is relevant to understanding reality.

For example, when I was arguing with Electric-Gecko, he told me that there was no reason for him to be familiar with what the crime rates are for African Americans and other races in the United States, since he is a Canadian and he lives in Canada. I responded to him that this wasn’t unreasonable. I also pointed out that different races have different crime rates in Canada too (e.g. Aboriginal Canadians and African Canadians have the highest crime rates in Canada). Since he had no valid excuses for not recognizing that different races have different crime rates, I labeled this as “Tactical Empiricism”.

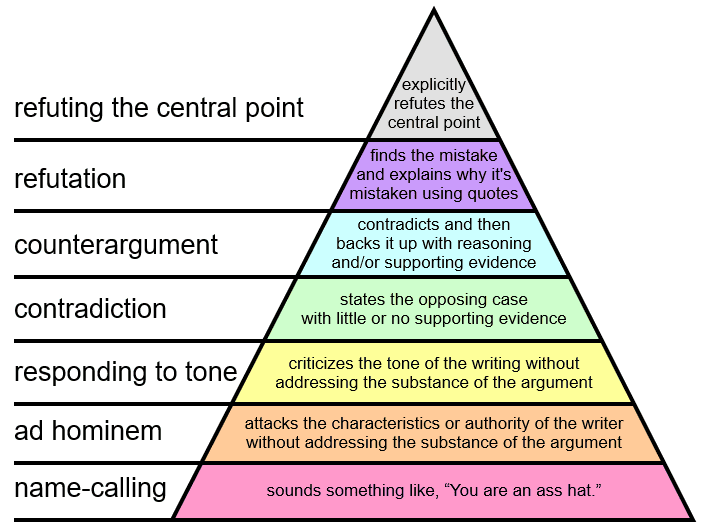

10. Graham’s Hierarchy Of Disagreement

Citation: Graham’s Hierarchy of Disagreement, under CC BY-SA 3.0, by Loudacris.

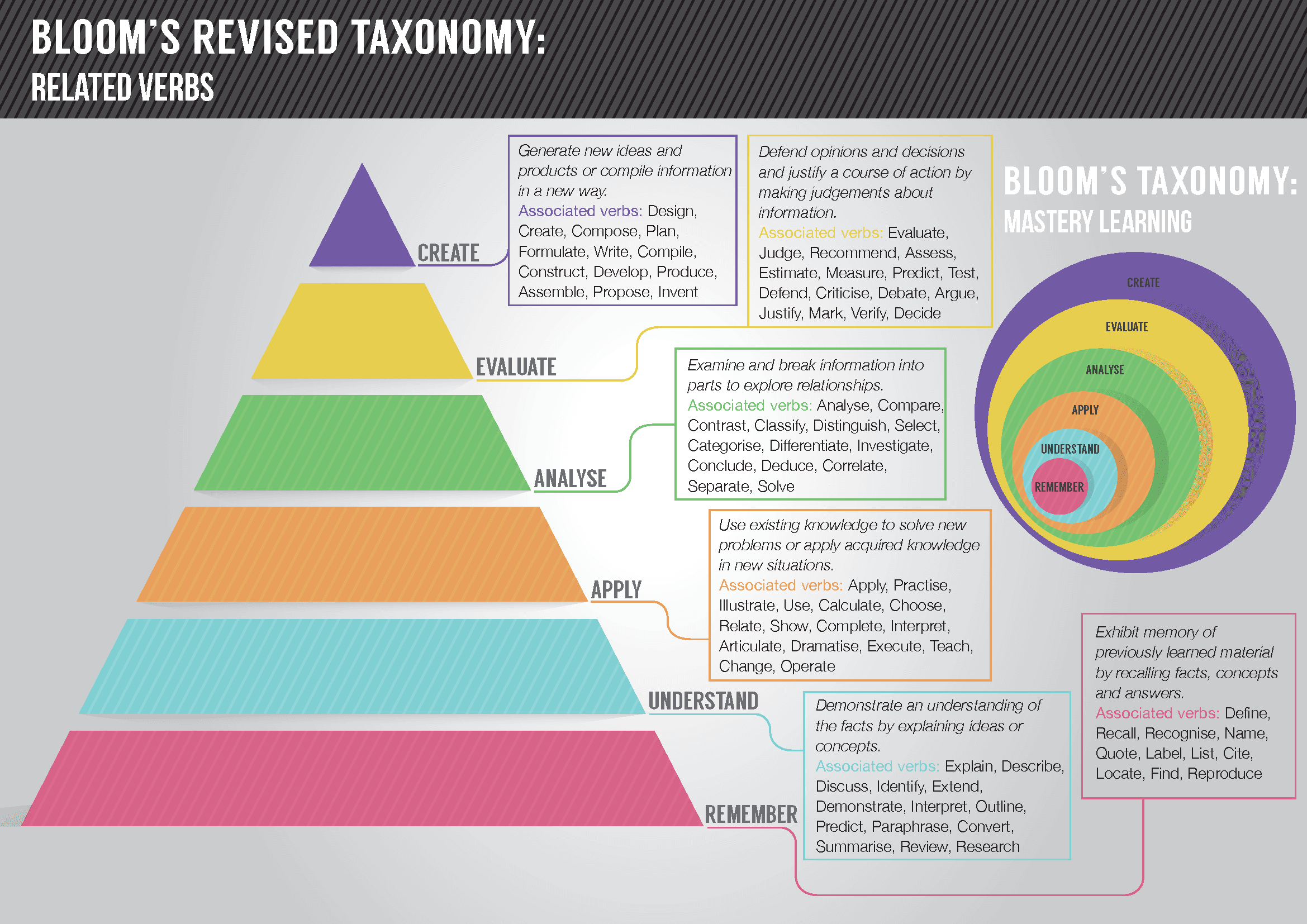

11. Bloom’s Taxonomy

12. Proofs

12.1. Introduction to Proofs

- Introduction to Axioms.

- Theorems are statements that can be proven to be true.

- Answering whether statements are provable or not is a topic in mathematical logic (proof theory).

- If a statement is provable (i.e. it is a theorem), we write a proof to prove it.

- If a statement is unprovable, it goes on the back burner.

- A proof consists of a series of steps, each of which logically follows from assumptions or previously proven statements, and whose final step establishes the theorem.

- Theorems should be rewritten using precise mathematical language before they are proven. All the assumptions in the theorem’s statement and everything that is known should be written down.

- If the proof is not a direct proof, it is good practice to state what type of proof is being done.

- It is extremely helpful to have someone else look over your proof. They will often be able to critique, spot holes, or make suggestions to improve your proof, which you may have missed due to your selective attention.

12.2. Advice For Improving Proof-Writing Skills

- Expand out unfamiliar terms.

- Replace generic statements with statements about generic objects.

- Include implicit information.

- Practice makes perfect.

More Advice: Example Step by Step Proof.

12.3. Types Of Proofs

- Direct Proofs prove a statement by reasoning forward from axioms, definitions, and previously proven results until the conclusion follows. They are most often used for conditional statements.

- In a direct proof of a conditional statement, the hypothesis p is assumed to be true and the conclusion c is proven as a direct result of the assumption.

- Proofs by Contrapositive prove conditional theorems of the form p → c by showing that the contrapositive ¬c → ¬p is true. Because a conditional and its contrapositive are logically equivalent, proving the contrapositive proves the conditional.

- Proofs by Contrapositive start by assuming that the conclusion of a conditional theorem is false, then try to prove the hypothesis false.

- Sometimes it is more difficult to assume that the entire conclusion is false. In such cases, it may be sufficient to assume that only part of the conclusion is false in order to generate a contradiction.

- This is the case with proving DeMorgan’s Laws. If we assume the entire conclusion is false, then we can’t break it down any further without the statement that we are trying to prove.

- However, it is sufficient to only assume that part of the conclusion is false. As long as this generates a contradiction, that is all you need.

- A Proof by Contrapositive is a special case of a proof by contradiction. Proofs by contradiction are more general because they aren’t limited to conditional theorems.

- Proofs by Contradiction (AKA Indirect Proofs) start by assuming that the theorem is false and then show that some logical inconsistency arises as a result of this assumption.

- If the theorem being proven has the form p → q, then the beginning assumption is p ∧ ¬q.

- Unlike direct proofs and proofs by contrapositive, a proof by contradiction can be used to prove theorems that are not conditional statements.

- If t is the statement of the theorem, the proof by contradiction begins with the assumption ¬t and leads to a conclusion r ∧ ¬r, for some proposition r.

- Proofs by Cases prove theorems by separating the domain for the variables into different classes and giving a different proof for every class.

- Every value in the domain must be included in at least one class.

- Proofs by Induction prove theorems by using a base case, induction hypothesis, and induction step.

- Counterexamples are assignments of values to variables that prove that a universal statement is false.

- It is dangerous to generalize from a set of examples because there can always be a counterexample that was not tried.

- Therefore, a general proof of a universal statement is usually more reliable than checking individual cases, unless the domain is small enough that every element can be checked (a proof by exhaustion).

- Proofs by Exhaustion prove statements by checking every element of the domain. They are typically used only for small domains.

- Proofs by Exhaustion are akin to Proofs by Cases with one case per element of the domain, which can mean thousands of individual cases.
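Several of the proof types above can be illustrated on a small scale. The following is a minimal Python sketch, not a formal proof system: it uses a proof by exhaustion over the four truth assignments to check that p → q agrees with its contrapositive and that ¬(p → q) agrees with p ∧ ¬q, and it searches for a counterexample to a universal claim. The helper names (`implies`, `equivalent`, `first_counterexample`, `euler_claim`) and the choice of the claim "n² + n + 41 is always prime" are illustrative assumptions, not part of the original notes.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Material conditional: a -> b is false only when a is true and b is false."""
    return (not a) or b


def equivalent(f, g) -> bool:
    """Proof by exhaustion: compare f and g on all four truth assignments."""
    return all(f(p, q) == g(p, q) for p, q in product([False, True], repeat=2))


# p -> q has the same truth table as its contrapositive (not q) -> (not p).
contrapositive_ok = equivalent(implies, lambda p, q: implies(not q, not p))

# The negation of p -> q is p and (not q): the opening assumption of a
# proof by contradiction for a conditional theorem.
negation_ok = equivalent(lambda p, q: not implies(p, q), lambda p, q: p and not q)


def is_prime(n: int) -> bool:
    """Trial division, adequate for the small numbers used here."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def first_counterexample(claim, domain):
    """Return the first value in domain for which claim fails, or None."""
    for x in domain:
        if not claim(x):
            return x
    return None


# Universal claim (false): n*n + n + 41 is prime for every natural number n.
euler_claim = lambda n: is_prime(n * n + n + 41)
counterexample = first_counterexample(euler_claim, range(100))
```

Here the claim survives every check from n = 0 through n = 39, and the search then returns 40, because 40² + 40 + 41 = 1681 = 41². This is the danger of generalizing from examples: forty confirming cases did not make the universal statement true.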

12.4. Tips For Proving Certain Things

One common way to show that two things are equal to each other is to show that the first is greater than or equal to the second, and that it is also less than or equal to the second. Then it must be the case that the two are equal.

To show that there is only one unique something in a specific case, it is common to assume that there are two somethings in that case, and then show that they are equal to each other. Since any two such somethings turn out to be equal, there can only be one.
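Both tips can be sketched in Lean 4 (core library only). The statements below are illustrative assumptions chosen for brevity: the first derives equality of two natural numbers from two inequalities, and the second shows that an additive identity is unique by taking two candidates `e` and `e'` and proving them equal.

```lean
-- Equality from two inequalities: a ≤ b and b ≤ a together give a = b.
example (a b : Nat) (h1 : a ≤ b) (h2 : b ≤ a) : a = b :=
  Nat.le_antisymm h1 h2

-- Uniqueness: suppose e and e' both behave as identities for addition.
-- Showing e = e' means there is really only one such element.
example (e e' : Nat) (he : ∀ n, n + e = n) (he' : ∀ n, e' + n = n) : e = e' :=
  calc
    e = e' + e := (he' e).symm   -- e' is an identity, so e' + e = e
    _ = e'     := he e'          -- e is an identity, so e' + e = e'
```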

12.4.1. Brief Semantics Notes on Quantifiers

Most (all?) natural languages would have a way to define the scope that a negative particle is applied to. In English, we could approximate this with: “It is not the case that…”

- Everyone lives on Earth.

- Everyone does not live on the Moon.

- No one lives on the Moon.

Notes

- Sentence #2 may sound weird in English, but that is actually how most natural languages would negate the translation of that sentence.

- Sentence #3 is a common feature of languages in the Standard Average European sprachbund. It uses a negative indefinite pronoun without using verbal negation.

- It is worth noting that sentence #3 is three syllables shorter than sentence #2.

- Even under optimal conditions, a sentence of the third type would still most likely be at least one syllable shorter than the equivalent sentence of the second type. This higher linguistic economy was probably one of several factors that contributed to the spread of the construction.

12.5. Universal Quantifier Proof Strategies

12.5.1. To prove a universal statement (∀x.P(x))

Goal: (∀x.P(x))

- Let k be an arbitrary object.

- Goal: P(k)

There are some variations of this.

12.5.2. To prove a universal statement (∀x.P(x)) over a restricted domain

Goal: (∀x ∈ A.P(x))

- Let k be an arbitrary object.

- Assume: k ∈ A

- Goal: P(k)

12.5.3. To prove a universal statement and implication

Goal: (∀x.(P(x) → Q(x)))

- Let k be an arbitrary object.

- Assume: P(k)

- Goal: Q(k)
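The three universal-quantifier strategies map closely onto the `intro` tactic in Lean 4. The sketch below is illustrative only: the predicates `A`, `P`, `Q`, `R` and the hypotheses are hypothetical, and the restricted domain "x ∈ A" is modeled as a predicate `A x`, which is an assumption made to keep the example within the core library.

```lean
-- 12.5.1: Goal ∀ x, P x
example {α : Type} (P Q : α → Prop) (h : ∀ x, P x ∧ Q x) : ∀ x, P x := by
  intro k            -- Let k be an arbitrary object.
  exact (h k).1      -- Goal: P k

-- 12.5.2: Goal ∀ x ∈ A, P x (membership modeled as the predicate A)
example {α : Type} (A P Q : α → Prop) (h : ∀ x, A x → P x ∧ Q x) :
    ∀ x, A x → P x := by
  intro k            -- Let k be an arbitrary object.
  intro hk           -- Assume: k ∈ A
  exact (h k hk).1   -- Goal: P k

-- 12.5.3: Goal ∀ x, P x → R x
example {α : Type} (P Q R : α → Prop)
    (hPQ : ∀ x, P x → Q x) (hQR : ∀ x, Q x → R x) : ∀ x, P x → R x := by
  intro k                  -- Let k be an arbitrary object.
  intro hP                 -- Assume: P k
  exact hQR k (hPQ k hP)   -- Goal: R k
```

In each case nothing is assumed about `k` beyond what the strategy explicitly introduces, which is what makes the conclusion hold for every object.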

12.6. Existential Quantifier Proof Strategies

12.6.1. To prove a statement of the form (∃x. P(x))

Goal: (∃x.P(x))

- Find a suitable c. This will usually take some thought.

- Goal: P(c)

12.6.2. To prove a statement of the form (∃x ∈ A. P(x))

Note: This is the version for statements with a restricted quantifier.

Goal: (∃x ∈ A.P(x))

- Find a suitable c. This will usually take some thought.

- Goal 1: c ∈ A (this often doesn’t require much work)

- Goal 2: P(c)
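The existential strategies amount to supplying a witness and then proving the required facts about it. A minimal Lean 4 sketch follows; the concrete statements are illustrative assumptions, and the restricted domain is modeled here as the condition 0 < x.

```lean
-- 12.6.1: Goal ∃ x, P x, where P x is "x + 2 = 5".
-- Find a suitable c (here c = 3), then prove P c.
example : ∃ x : Nat, x + 2 = 5 :=
  ⟨3, rfl⟩

-- 12.6.2: Goal ∃ x ∈ A, P x, with "x ∈ A" modeled as 0 < x
-- and P x as "x + x = 4". The witness is c = 2.
example : ∃ x : Nat, 0 < x ∧ x + x = 4 :=
  ⟨2, by decide, rfl⟩   -- Goal 1: 0 < 2.  Goal 2: 2 + 2 = 4.
```

Finding the witness is the part that takes thought; once it is chosen, the remaining goals are often routine, as the note on Goal 1 suggests.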