Technology and Civilization

Musings on Technology Overuse, AI, Evolutionary Mismatch, and the Future

1. Influential Inventions

1.1. The Most Influential Inventions Of All Time

You never change things by fighting the existing reality. To change something, build a new model that makes the existing model obsolete. – Buckminster Fuller

The scale of technology depends on the scale of civilization, so most of these inventions couldn’t exist on an effective industrial scale without the others. Some of them may also have affected more people than others, such as the Internet vs the printing press. But there’s no way the Internet could’ve existed before the printing press, since the printing press was necessary to help create a world that could invent the Internet. Hence, it’s not really meaningful to rank and identify any “best” set of inventions, but we can identify some inventions that have been comparatively more influential than others in terms of how they affected civilization. Furthermore, while it’s undeniable that all these inventions have affected human civilization, they each affected it in different ways, so it’s debatable which of them have been more influential than others. Ranking the most important inventions in history as an ordinal list would imply a theory of value.

- Shipping Container

- Transistor

- Digital Computers

- The Internet

- Vaccines

- Printing Press

- Typewriter

- Telegraph

- Telephone

- Smartphones

- Caravel

- Steam Engine

- Wind Mills & Turbines

- Nuclear Bombs

- Nuclear Reactors

- Railroads

- Automobile

- Airplanes

- Firearm

- Fire

- Chariot

- Crop Rotation

- Haber-Bosch Process (needed for the synthetic nitrogen fertilizer that modern agriculture depends on)

- Interchangeable Parts

- Cotton Gin

- Sewing Machine

- Refrigerator

- Radio

- Television

- Vacuum Cleaner

- Dishwasher

- Washing Machine

- Clothes Dryer

- Sewage Systems

1.2. The Most Influential Inventions Since The Industrial Revolution

- Vaccination

- Shipping Container

- Printing Press

- Transistors

- Computers

- Smartphones

- Databases

- Modern Kitchen Appliances

- Water Heater

- Air Conditioner

- Steam Engine

- Light Bulbs

- Photography

- Radios

- Television

- Cheap, Accessible Music

- Locomotives

- Automobiles

- 3D Printers

- Airplane Transportation

- Improvements in Medical Care and Health Treatments

- Improvements in Building and Construction

- The Internet

Technological progress has slowed toward stagnation since the 1970s. Nevertheless, Gwern has written a great list summarizing the main improvements that have happened since then.

1.3. The Most Influential Primitive / Pre-Industrial Inventions

- Fire

- Shelter

- Clothing

- Wheel

- Agriculture

- Domesticated Animals

- Building / Hunting / Agriculture Tools

- Aqueducts

- Naval Transportation

- Clocks

- Writing Systems

- Positional Base-10 Numbering System (maybe not developed in the same time period as the rest of these, but still important nonetheless)

1.4. The Speed Of Technological Progress

Video: 5 Machines We Could’ve Had 2,000 Years Ago If Only The Romans Tried – The Mike Stuff.

Oftentimes, technologies don’t appear as soon as they could have. This usually seems to occur when it simply took a while for people to conceive of the new technology, and/or when the technology wasn’t very important to begin with. For two modern examples, cryptocurrencies and LLMs both could’ve appeared years before they actually did. They can facilitate some things in some cases. But as it currently stands, they aren’t essential, and it’s possible to live sufficiently well without them by relying on more primitive technologies.

It can also be a good thing when technology doesn’t progress as fast as it could. New technologies increase evolutionary mismatch, so it can be arguably better for technology to progress more slowly.

2. Technology Causes Just As Many Problems As It Solves

The problem with solving third-world problems with technology is that many simultaneous technological changes, malevolent actors, and evolutionary mismatch make it so that technology creates just as many problems (first-world problems) as it solves. In many cases, the new problems are just as bad as the old problems, and arguably worse in some cases. People tend to be more oblivious to the downsides of technology than they are to the upsides. When I say “first-world problems”, I’m not just talking about minor inconveniences. I’m also referring to all the problems mentioned in the following lists.

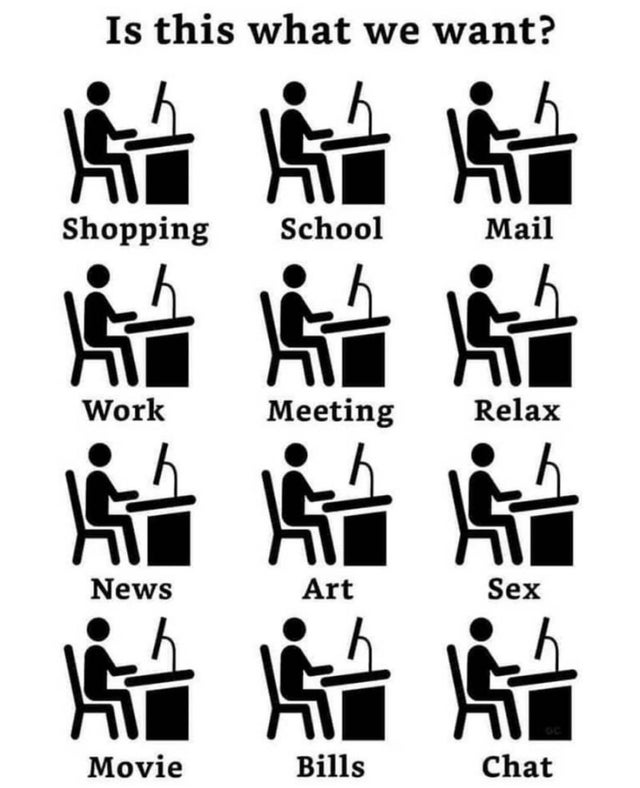

2.1. Overuse Of Technology

- less in-person social interaction

- insomnia caused by exposure to blue light from screens

- carpal tunnel syndrome and repetitive strain injury caused by non-ergonomic keyboards

- unrealistic expectations for relationships, life, etc.

- supernormal stimuli

- light pollution

- traffic congestion

- decreased attention spans

- identity theft

- hacking

- worrying about hacking and identity theft

- worrying about personal information being collected by companies when you buy/use their products

- The increasing potential for smokescreens by AI will make it ever more difficult to distinguish between what’s fake and what’s real in the world.

- cryptocurrencies are making it easier for people to commit crimes

- The development of myopia in the modern world

- Amusing Ourselves to Death? - Gwern

- A suggested x-risk/Great Filter is the possibility of advanced entertainment technology leading to wireheading/mass sterility/population collapse and extinction.

- As media consumption patterns are highly heritable, any such effect would trigger rapid human adaptation, implying extinction is almost impossible unless immediate collapse or exponentially accelerating addictiveness.

Related: Where are the builders? - Near

2.2. Overuse Of Internet

- depression and alienation caused by social media websites

- loneliness

- increased gossip

- cancel culture

- online echo chambers

- virtue-signaling tragedy of the commons, made even worse thanks to social media

- captive subscribed brainwashed audiences

- invasive advertisements, spam, commercials, solicitors, etc.

- over-exposure of personal information

- porn addictions

- abnormal behavior caused by watching pornography

- lack of confidence in body-image

The most notorious virtue-signalers implicitly feel that the #1 goal of someone’s life is to be considered a good human, not to actually be a good human, although they don’t explicitly realize this.

2.3. Diseases That Are More Common In Developed Countries

- depression

- binge eating disorder

- anorexia / bulimia

- obesity

- drug addictions

- sedentary lifestyles

- heart problems

- cardiovascular problems

- myopia

2.4. Diseases That Tend To Appear In People Who Live Longer

- Dementia and Alzheimer’s

- Parkinson’s Disease

- Cancer

- Osteoporosis

- Mobility Issues

Note: I’m not saying that living long is a “bad” thing. Extending the healthy lifespan of humans would provide huge benefits. A human being is an expensive machine to create, so the longer it lasts, the more those costs are amortized over a productive lifespan. The point of creating this list was to support the thesis that technology replaces old problems with new problems. In this case, the new problems are better than the old problems. Many of these new problems could also be alleviated with healthier lifestyles: better diets, adequate exercise, adequate sleep, etc.

2.5. Other Problems

- rising dysgenics

- imminent overpopulation crises

- inceldom; it’s harder than ever to find a partner these days.

- deformed body parts

- materialistic consumer culture

- boring jobs

- graveyard shift jobs

- rigid scheduling

- lack of sleep caused by demanding jobs (this also occurred during the industrial revolution)

- pets’ physical disorders caused by selective breeding

- needing to euthanize pets

- time-demanding world

- other social problems

- tracking devices like Apple AirTags have been abused for stalking people, despite originally being intended to help people keep track of their potentially missing belongings.

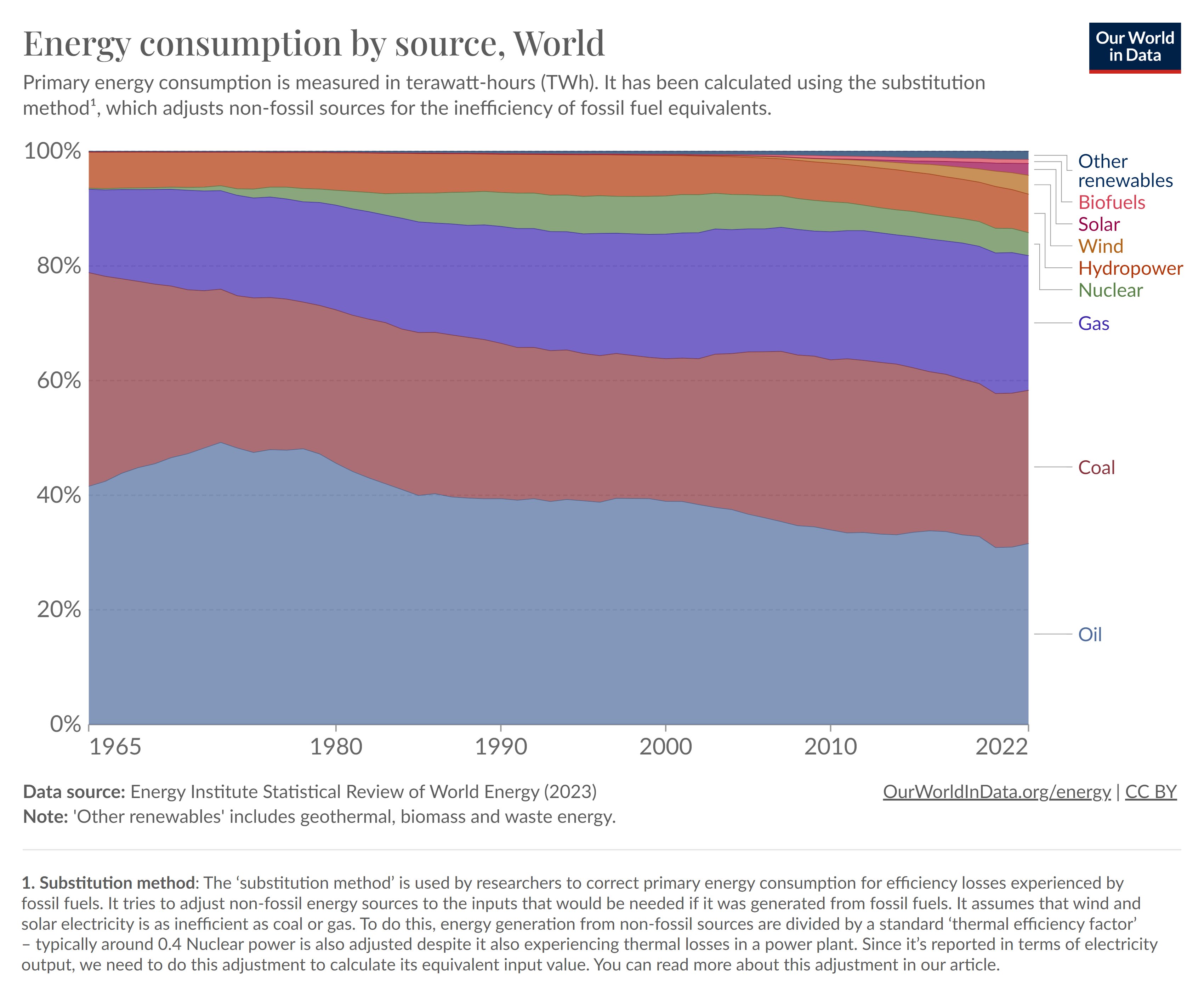

- increased carbon emissions that cause environmental problems

- increased pollution

- increased plastic pollution

- car accidents

- ever-decreasing linguistic diversity (sad if you value linguistic diversity and/or find languages to be interesting)

- increased fragility: it may only take one or a few parts in a complex technological system to fail for the entire system to fail, which is distressful for the people who depend on said system.

3. Thoughts on Artificial Intelligence

3.1. Introduction

See: Wikipedia: Artificial General Intelligence.

Regarding Artificial General Intelligence, I personally think “general” is too ill-defined, and it seems to be a label-of-the-gaps. People use “general” when they want to describe a human-like intelligence or some other strong intelligence they can’t define properly yet. However, human intelligence isn’t really general if you become more rigorous with your definitions. All intelligence requires searching and creating a path through a space of given options. For humans, the space of options is normally controlled by our environment, the information that our senses can gather from our environment, and the knowledge that we currently have.

NOTE: I wrote most of the text below in 2024. As of November 2025, I still strongly lean towards AI skepticism. AI is still evolving, so some of my thoughts may become outdated before I can update them, but I doubt that will happen much. I recommend pivot-to-ai.com as a great source of information on why AI is over-hyped, overrated, and likely to harm society just as much as it helps it.

3.2. Thoughts on AI Safety And Alignment

At one point, I discovered Rob Miles’ AI Safety YouTube channel. I think he’s made some interesting and persuasive arguments for why we should be concerned about AI alignment. Until I started watching Rob Miles’ videos, I didn’t have any interest in the potential existential risks posed by AI. But even after watching most of his videos and looking into the topic more myself, I still think it’s unlikely that AI advancement will lead to a malevolent singularity or to the extinction of humanity.

- Intelligence is always constrained. Intelligence (or at least, the type of intelligence that computers have) is just the ability to search through a large space of possibilities. Problem-solving takes place within a frame that limits the space of possible solutions.

- Intelligence does not imply knowledge. Knowledge is acquired from experience. An AI program would only have the knowledge that we give it, or give it the ability to acquire. Information is only knowledge if it can be interpreted.

- Intelligence does not imply agency. Agency is the ability to act in the world: to do things. Intelligence does not confer power. A computer program can only do what we design it to do.

To summarize the summary: Intelligence is not magic. – T. K. Van Allen, Futurist Fantasies
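The first point, that problem-solving is a search bounded by a frame, can be illustrated with a toy sketch. Everything here is hypothetical and made up for illustration: the puzzle, the `search` function, and the two frames. The same search procedure finds nothing when the frame excludes the answer, no matter how thoroughly it searches.

```python
from itertools import product

def search(frame, is_solution):
    """Exhaustively test every candidate in the frame (one range of options per variable)."""
    return [candidate for candidate in product(*frame) if is_solution(candidate)]

def is_solution(candidate):
    # Hypothetical puzzle: find (x, y) such that x + y == 10 and x * y == 21.
    x, y = candidate
    return x + y == 10 and x * y == 21

# Assumed frames: the narrow one only allows values 0..4, the wide one allows 0..10.
narrow_frame = [range(0, 5), range(0, 5)]
wide_frame = [range(0, 11), range(0, 11)]

print(search(narrow_frame, is_solution))  # [] because 7 lies outside the frame
print(search(wide_frame, is_solution))    # [(3, 7), (7, 3)]
```

The search itself is identical in both calls. Only the frame, which is supplied from outside, determines whether a solution can be found at all.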

Humans have agency because humans are organisms that emerged from evolution, which is a consequence of causality. Biological agency evolved because it was adaptive (and instrumental) for reproduction. By contrast, computers were created by beings that have agency (humans), so they will be limited to the agency of their creators, for the foreseeable future.

The concept of Instrumental Value Convergence implies that AGI could independently evolve agency, if it’s instrumental to accomplishing what the AI is programmed to do. So, if we program an AI to have a set of values that it must accomplish, and we grant the AI the power to reprogram its own source code to find better ways for accomplishing its goals, the AI could hypothetically create a new AI that could find new ways to accomplish its original goals. In essence, this would create the causal loop for computers to independently evolve their own agency, just as animals independently evolved agency to benefit their reproductive success.

However, this thought experiment runs into a few problems. First, without deep understanding, an AI that edits its own source code would have to do a lot of trial and error to finally create a better version of itself, which would consume a lot of energy. Second, AGI would be created to serve humans, so it wouldn’t make much sense for humans to give AGI agency that could conflict with or harm human interests. And even if an AGI were created that wanted to harm humans, wouldn’t there be other AGIs around the world whose goals are to advance human interests and protect humans against such threats? It seems that pro-human AGIs are more likely than anti-human AGIs to be created in the future.

Currently, the main bottleneck preventing AI from crossing the gap from intelligence to knowledge is that AI would need experience. AI has intelligence, but it would need experience, and need to know how to interpret said experience, in order to have true knowledge. It may take decades before humans ever create an AI that has experience and knows how to interpret it. But even if that happens, how could the AI ever know how to independently use said experience for itself, if it will never be able to have the same independent agency that a human or animal does?

Nevertheless, I am worried and pessimistic about how Artificial Intelligence will affect society and change the world. In particular, I’m especially worried by how malevolent actors, criminals, and authoritarian governments like China and North Korea could use AI in ways that would harm civilization overall. The usage of AI by malevolent human actors is guaranteed, whereas the evolution of a malevolent singularity is unlikely. In general, it’s just irrational from a societal perspective that technology keeps advancing, while most humans have yet to create a rational philosophy for understanding how we should expand our agency in the world and why. In an ideal world, all further research on AGI would be paused for a few years or so until humanity sufficiently expands its rationality and comes to a consensus on what we should do for achieving the best future for all of humanity.

3.3. The Alignment Problem Is Not Unique To AI

There are different variations on the Parable of the Rocks, Pebbles, Sand, and Water. But the message is the same:

- The rocks represent your greatest priorities.

- The pebbles represent important priorities.

- The sand represents minor priorities.

- And the water represents everything else.

When prioritizing what matters to you, you must put the rocks in the jar first. They will not fit later. And so it is with the pebbles, sand, and water. They only work in that specific order. For an entrepreneur, that means putting revenue generating activity first thing in your day. If you put it off until later, you will not get around to it. But if you start with it, you’ll either have plenty of time left over for everything else you need to do, or the act of completing a “rock” project will make all other activity irrelevant.

For AGI misalignment, AGI is to sand as raising a child and all other problems are to rocks. The fear of AGI utilizes Pascal’s Mugging, which rationalizes focusing on AGI misalignment (for unreasonable reasons). You could have a child or country that grows up to be good or evil, strong or weak, etc. In most cases, human children won’t become “evil” unless their parents teach them to be evil.[1] The same applies to AIs that are learning how they should act or behave. Value alignment is always a problem when creating new agents, so it’s not unique to AI.

There are other relevant issues that are just as important, regardless of whether AI misalignment happens or not. So there isn’t much point in worrying about these problems with AGI specifically.

3.4. AI and Mass Unemployment

Also see: AI is not taking our jobs anytime soon – Sebastian Jensen.

A lot of people have been attributing the rough state of the white collar job market (after 2022) to AI automation, but that’s not the case. Some graphs and basic economic theory show that it’s better explained by the Federal Reserve’s high interest rates. The higher interest rates of today’s economy have made it more expensive for companies to innovate and expand their business operations, while increasing the interest that companies can earn on savings. This has restricted the number of white collar jobs available, especially within the technology sector.

Also see: What would be the benefits of eliminating pure interest?

Since AI will probably never have independent agency, AI will never be able to replace every job that a human does. In the near future, AI may be able to surpass the speed and success of the most intelligent and most well-educated humans at searching through a space of possibilities. However, it will probably take much longer (if ever) before AI gains the ability to acquire and interpret experience. Until then, humans will always have actual knowledge, whereas AI won’t (and may never). Hence, AI will not be able to replace white-collar jobs that require mental and conceptual experience or human oversight. AI would have to be guided by humans that do have said experience. If robotics gets up to speed, however, then it may be possible to program robots that can automate jobs that only require mechanical work and little to no thinking.

For example, it is unlikely that pilot jobs will be replaced by large-scale automation at any time in the future. In the far future, there may be a push toward automating the flight deck, but it’s incredibly unlikely that pilots will ever be fully removed from an aircraft with the general public traveling in it. Trains quite literally run on rails on a much smaller scale in a significantly more predictable environment, and they still have engineers and conductors to operate them on a daily basis. These observations seem to imply that some jobs and labor will always require human oversight, rather than being fully automated.

Talented people are unlikely to get replaced by AI, especially if they know how to work with AI. The advent of AI and automation will probably cause some types of human intelligence to become less important, while other types of human intelligence will become more important.

Some developed countries have declining populations. For such countries, AI advancement ought to be a good thing, since it can help compensate for a declining labor force. For instance, Japan is increasingly reliant on technology, since it has one of the oldest populations in the world.

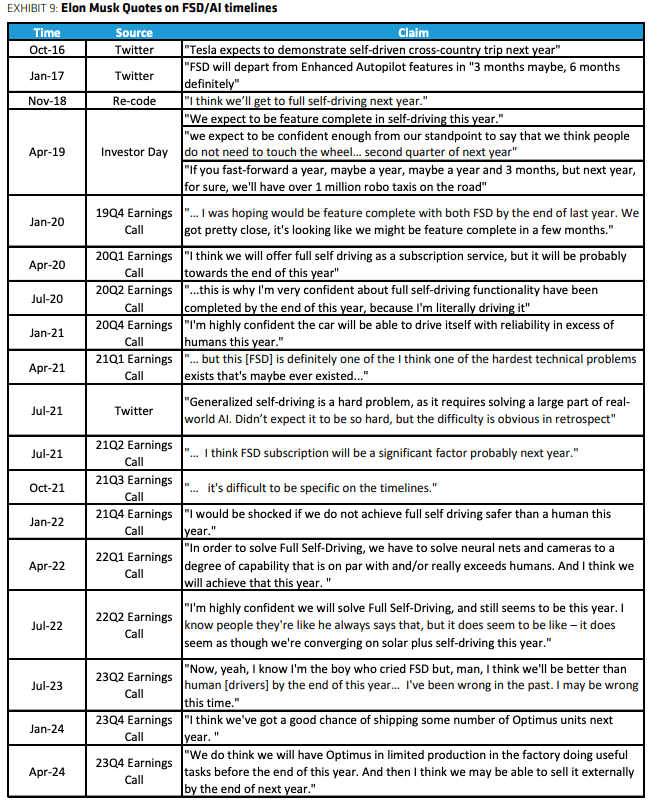

3.5. Predictions For Self-Driving Vehicles

Why Self Driving Cars are a Big Scam:

- The correct solution to saving lives on public roads would be to take bad drivers off the road, or to improve the safety of the roads. Self-Driving cars could only reduce traffic if they stop bad drivers from driving.

- Self-Driving cars will make it easier for the government, car manufacturers, and other people with power to control where people go.

- Self-driving cars rely on external sensors. If the sensors are blocked (e.g. snow, sandstorm debris, dirt) or broken somehow, then the cars will malfunction.

- Modern traffic systems are designed to regulate human-driven cars, not self-driving cars, so driverless cars aren’t very compatible with the current roads. Even if traffic-free roads were built specifically for self-driving cars, that would require doubling urban infrastructure, which wouldn’t improve anything.

- Self-driving cars wouldn’t resolve most of the existing problems with cars. The better solution is to build up density in rural areas and have as many cities switch to high-speed rail as possible.

With all that being said, highway drivers and cargo truck drivers are likely going to be the first to be replaced by self-driving vehicles. Highways offer the most ideal conditions for self-driving vehicles because they have the least traffic, have more predictable road conditions, and feature regular and routine drives, and because there is much less concern over removing human control from cargo transporters. It may be possible to resolve any sensor problems with highway driving via regular sensor-cleaning checkpoints, and by programming self-driving vehicles to pull over and off the road when their sensors get blocked.

4. Evolutionary Mismatch

4.1. Two Paths To Solve Evolutionary Mismatch

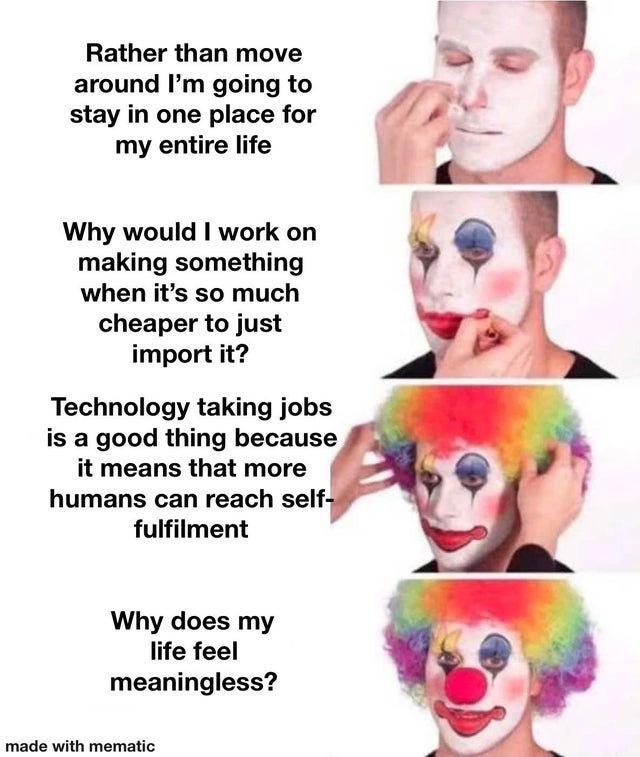

There are essentially two main paths to solving evolutionary mismatch. We can imagine two distinct worlds. The first is a Primitivist world where humans are living in an environment that is similar to the one they evolved to live in for most of human history. The second is a technologically advanced world (Pragmatopia) where humans are well-adapted to living in a high-tech environment. Humans may become adapted to this environment through selection via eugenics, the mass adoption of stable memetic traditions, or some combination of those factors. The Pragmatopian World is probably the closest thing that could ever be to a transhumanist utopia. But it would probably be less technologically advanced than the transhumanists are hoping for.

We can also imagine a third world where evolutionary mismatch is not resolved at all, much like the one that the Modern World is currently living in as of the early 2000s. This third world is far less pleasant than the Primitivist or the Pragmatopian Worlds because evolutionary mismatch is a major cause for social unrest, political instability, massive struggling, and the loss of purpose via the disruption of the power process. Technology, society, and culture change faster than evolution can, which is how evolutionary mismatch managed to occur worldwide within just 200 years since the Industrial Revolution.

Primitivism solves the problem of evolutionary mismatch. Transhumanism does not, nor does it even recognize it as a problem that needs to be addressed.

4.2. The Effects Of Technology On Human Evolution

When deciding if a new technology or social change should be made to the population, we should be asking if it will be worth the impact that it will have on human evolution.

- For example, vaccines are worth implementing since they don’t significantly increase the dysgenics of the population, for as long as it is possible to mass produce vaccines for all humans, and all livestock.

- On the other hand, a public healthcare system is a bad idea since it subsidizes unhealthy people and unhealthy lifestyles, whereas allowing natural selection would make it possible to get rid of dysgenic genes that make people more likely to smoke, more likely to become obese / sedentary, more likely to get cancer, etc.

Historically, the people who move society’s technology forward have been smarter than the average person in the population. So historical discoveries in primitive technology were made by the smartest humans, while the less intelligent rest of the population learned and followed how to use the newer, more advanced technologies. Using such technologies may select for higher intelligence in some cases after a while, but probably not always, and certainly not instantaneously.

What I’m trying to get at here is that most people in a society don’t need to be particularly intelligent in order to cause rapid technological advancement. There just needs to be a sufficient number of intelligent people to drive said advancement. The end result is that it’s easy for a majority of a society’s population to end up surrounded by technology that they use. Such technology would be maladaptive to their behavior. The society would not be able to fully predict or comprehend the effects of the technology until it is too late.

Near the end of this podcast episode, Dr. Gregory Clark talks with Steve Hsu about how the turning point for when average IQ started to decrease instead of increase was around the 1850s for Europe.

4.3. Job Transitions And Evolutionary Mismatch

Theoretically, the advancement of free trade and economic development of a country could potentially have negative effects in the short-term if it caused different types of jobs to be worked in the country, compared to what the population was used to. The societal changes could interfere with the population’s ability to adapt to the modern industrial-technological world.

For example, a society that transitions from rural agricultural jobs to manufacturing and other blue-collar jobs, and then to white-collar jobs would have to stumble through the natural selection imposed by the previous stages of the society’s jobs. This could make natural selection in favor of a population that’s adapted to modernity take somewhat longer in the society. As with everything, more evidence would be required to prove that this is not just speculation.

IBM workers have had a saying for two decades: “If it can be worked remotely, it can be outsourced.” This has been the reality in the IT field for 20 years. Anecdotally, it’s been said that the outsourced work has often been terrible, and most of it has to be redone by actual skilled employees.

Outsourcing lowers the service quality everywhere it has been done. It is a way for incompetent, so-called leadership to show how they saved money. Everyone at the company suffers as well as their customers when this is done. The company still has to maintain staff to fix all the issues outsourcing creates. Outsourcing is a terrible idea and it doesn’t work as many have expected.

4.4. The Power Process

The Power Process, as defined and explained in Industrial Society and Its Future by Ted Kaczynski

The “Power Process” described by Ted Kaczynski is most similar to the following terms that are used in Academia:

When Humanists are clamoring about wanting people to “flourish”, they don’t specifically define the term, but what they most likely mean is that they want people to go through the power process. Unfortunately, Humanists don’t have the philosophical grounding to understand what the power process is, or how technology prevents people from experiencing the power process.

Transhumanists don’t have any solutions for ensuring that people are able to find purpose and meaning in their lives in the Modern World. Somehow, they believe that creating even more technology will automatically make everything better, even though it will more likely make the problem even worse by making purpose even harder to attain. On the other hand, Neo-Luddism has very easy solutions for ensuring that people will still be able to find purpose and meaning. Neo-Luddism seems in line with many absurdist and existentialist ideas.

People don’t want to use philosophical thinking to figure out their purpose. They just want to be placed on a hamster wheel and have their purpose be given to them. This used to happen before birth control existed because once a baby was born and you couldn’t do anything to stop it, you had to take care of it, and that would become your purpose. But that purpose is now gone in modernity.

4.5. Neo-Luddism / Anarcho-Primitivism

For an introduction to the philosophy and ideas of Neo-Luddism, reading Industrial Society and Its Future is a great place to start since it’s famous and reasonably short. It’s said that Ted Kaczynski got most of his philosophy and ideas on technology from The Technological Society by Jacques Ellul, but I haven’t read that book myself. From what I’ve heard, Anti-Tech Revolution: Why and How is more comprehensive and more up-to-date than Industrial Society and Its Future, but I wouldn’t recommend it for most people because it’s honestly too long for an introduction to Neo-Luddism. Kaczynski spends too many pages of the book trying to justify his utopia as the best one, but this is a fruitless effort since there is no a priori basis for value. There are other books that people could read on Neo-Luddism, but I don’t know which to recommend.

Read More: Neo-Luddism.

Read More: Simple Living.

Personally, I like Neo-Luddism more than Transhumanism. It feels more natural to me, and I know I wouldn’t ever have to worry about losing a sense of purpose in life and not being able to go through the Power Process. I would rather that the world had stayed in a primitivist state for a longer period of time before starting the Industrial Revolution, if it meant that we could avoid the imminent collapse of civilization caused by humanity’s evolutionary mismatch and general ignorance.

The idea that industrial civilization limits our agency is wrong. It’s basically a twist on the Marxist idea of wage labor causing “alienation”. Kaczynski blamed industrial society for his social problems, similarly to Elliot Rodger, the Columbine killers, etc., and he lived on money from his parents. He failed to realize that an individual’s agency is always going to be limited by whatever society they live in. Moreover, most Neo-Luddites are probably in favor of at least some technology (e.g. vaccinations), precisely because it would expand their agency.

4.6. The Feasibility Of Achieving Neo-Luddism

What ultimately matters in this world is who has the power to enforce their rules. The probability of industrial society collapsing in the hands of revolutionaries is infinitesimal because a vast majority of people would never consider destroying the society even when presented with a strong argument to do so.

Additionally, countries or groups with better technology tend to dominate those without. Hypothetically speaking, if a country like the United States were to deindustrialize and return to a primitive state, what’s going to stop other countries like Russia or China from conquering the country? If they were to do it, they would inevitably subjugate the lives of American inhabitants and reestablish some type of techno-industrial society. Similarly, even in a nuclear apocalypse scenario, groups or individuals with better technology (such as preppers or the rich) are far more likely to survive than those without said technology.

The two things that are actually guaranteed to bring down the techno-industrial society to a more primitive state are time and natural resource depletion. As Blithering Genius has said, “collapse is forever”.

Addressing Neo-Luddite Arguments

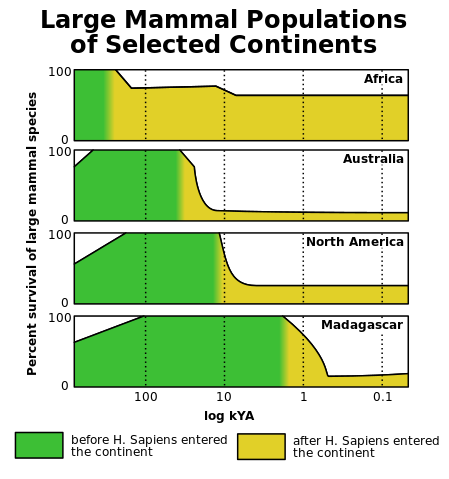

Warfare only became more prevalent after 12000 BC. Humans were (mostly) peaceful before that.

It’s probably true that humans fought fewer wars against each other before all/most of the megafauna died off over 10k years ago. Among the large animals in North America and South America that disappeared around 13,000 years ago, there were horses, camels, ground sloths, all of the elephant species (including mastodons and mammoths), giant beavers, giant armadillos, the short-faced bear, the various saber-toothed cats, and so on. Before the megafauna died off, humans most likely would’ve been working together to hunt and kill megafauna. Once the megafauna died off, what most likely happened is that the food supply dropped, famines started to occur, and that incentivized humans to start fighting wars against each other to steal other people’s land and get food (because there were no other viable food sources, and thus no other viable choices).

Where large animals survived, they were either forest dwellers such as deer, mountain dwellers such as mountain goats and mountain sheep, or animals that had fairly recently migrated from Eurasia, such as bison (which were already wary of humans rather than naive, so they survived). But most of the big animals got wiped out, and the Native American population perpetrated the first great ecocide in the Americas.

If I encounter any other arguments by Primitivists, I will write rebuttals to them here.

5. Transhumanism

5.1. General Thoughts On Transhumanism

As Transhumanism is defined on Wikipedia, I have mixed opinions about it:

- I think that many technologies that are proposed by transhumanists are unrealistic, impractical, likely to have downsides, and/or not as useful as they’re hyped up to theoretically be.

- The biggest problems currently facing humanity are social problems, not technological problems.

- Transhumanism is mostly a distraction from what humanity should be focusing on.

- Most transhumanists are over-optimistic about the speed of technological development.

- Immortality is neither feasible nor desirable.

- I don’t believe it’s possible to meaningfully reduce suffering.

- I support eugenics and many genetic technologies. However, I think genetic engineering could be abused and interfere with optimal selection.

- I support protecting humanity from existential risks. I doubt AGI will be a threat to humanity.

- Technology creates just as many problems as it solves. Transhumanists rarely acknowledge this.

- The technology of the modern world has already made it harder for people to go through the power process. Even more technology will only make that even worse.

- There’s probably some room for technological improvement that will significantly improve humanity with little controversy. Besides genetic technologies, reducing existential risks, increasing sustainability, technology & infrastructure reform, I’m not entirely sure what that might be, or what it would look like.

5.2. Biological Immortality

Biological immortality is likely impossible, as explained in Sex, Death, and Complexity. The length of telomeres in chromosomes also poses another restriction on maximal lifespans:

At the end of every DNA strand in your chromosomes there is a repeated sequence called a “telomere”, which causes the strand to fold on itself and prevents it from unraveling. The telomere gets shorter each time the DNA strand is replicated. The length of the telomere limits the number of times that the cell can replicate. (This is a slight simplification.) We could probably use genetic engineering to turn on the gene that produces telomerase in all of our cells. That enzyme adds telomere sequences to the end of the DNA strands, so it allows cells to replicate indefinitely by mitosis. But that would probably increase the likelihood of cancer. It probably wouldn’t extend your lifespan by much, and it might even shorten it.

– Blithering Genius, “Technology and Progress”

Even if biological immortality were possible:

- It would require an extensive amount of advanced technology, which will be much less feasible once the scale of civilization and economics declines. Infinite economic growth is impossible.

- Achieving it for all humans would guarantee overpopulation, since there would be no way for populations to go down.

- If humans live forever, then that would probably reduce some incentives to create new humans, which could slow down natural selection.

So, achieving immortality is undesirable. It would be better for everybody to focus on maximizing their own reproduction. Reproduction is how every organism achieves its objective purpose in life.

5.3. Peak Technology

There’s probably a finite number of good ideas out there to discover, due to the physical materialist nature of the Universe. The opposite belief (i.e. there’s an infinite number of good ideas) seems less logical. A lot of times, there’s only one best way to do something. A lot of the agricultural methods that were developed thousands of years ago in the world’s great river valleys are still used today. The same could be said about how the wheel, fire, and many other inventions are still used today. Once humanity discovers the best of the best, there are often not many ways to improve beyond that.

In many ways, humanity has already reached “peak technology”. There’s still room for improvement in many areas, like AI, embryo selection, gene-editing, urban planning, robots and nanobots, and other things, but once we achieve them, there will be even less room for future growth. There are physical and economic limits to what technology can achieve. For instance, it’s very probable that humanity will never colonize Mars or other planets, and this is explained in Futurist Fantasies by T. K. Van Allen and The Limits of Space Colonization.

The technological progress that occurred from 1970 to 2020 is much lower than the progress from 1900 to 1970. Technology has clearly slowed down. And while it is still improving in some areas (e.g. artificial intelligence), the rate of progress is going to keep slowing down.

To be clear, I’m not saying that no technological progress has happened in the last 60 years. My position that progress has slowed down should not be conflated with the belief that no progress has happened at all since 1970. If you compare the technological progress made from 1900 to 1960 to the progress made from 1960 to 2020, then you would expect to see much more technology in the modern world, but you don’t.

When 2001: A Space Odyssey was released in 1968, most people expected that many of the technologies shown in the film would materialize by the 2000s, but that never happened. They were not able to predict that progress would slow down.

Everything in 2001: A Space Odyssey can happen within the next three decades, and…most of the picture will happen by the beginning of the next millennium. – Metro-Goldwyn-Mayer Studios

List of technologies that never happened by the 2000s: civilian space travel, space stations with hotels, Moon colonization, suspended animation of humans, practical nuclear propulsion in spacecraft, strong artificial intelligence of the kind displayed by HAL, etc.

For the 2000s, it currently seems (as of 2025) that embryo selection and AI will probably have the biggest effects on civilization.

Further Reading:

- Technology and Progress.

- The Rise and Stagnation of Modernity.

- Share of United States Households Using Specific Technologies - Our World in Data.

- Scientific Progress is Slowing Down. But Why? - Sabine Hossenfelder.

- Did the Industrial Revolution decrease costs or increase quality?

- Futurist Fantasies by T. K. Van Allen.

5.4. The Irrationality Of Modern Civilization

If I had asked people what they wanted, they would have said faster horses – Henry Ford

And if people guessed that the people of the future (100+ years after the Ford Model T came out) wanted better cars, then they’d be wrong on that too. A rational modern civilization doesn’t want cars. It would rather have high-speed rail and a combination of efficient, sustainable, and low-pollution transportation methods and infrastructure. 20th-century human civilization failed to figure this out because modern civilization is irrational.

Everybody is using up gasoline and oil these days as if they were water. Unless someone is walking, riding a bicycle, or riding a horse, they have to use oil, one of the world’s most valuable and energy-rich resources, to travel anywhere that they want to go. Even if people use electric vehicles, EVs still inherit all the problems of cars and they increase carbon emissions and pollution around the world, which exacerbates climate change and global warming.

The purpose of electric cars was not to save the environment, but rather to save the automobile industry. People won’t be able to drive cars anymore if the world runs out of gasoline, so the theory is that replacing them with electric vehicles instead should cause people to continue buying cars even after global oil depletion happens. Unfortunately, no one with significant political power seems to care about how unsustainable this may be, in terms of unnecessary resource consumption.

I really hate how the world is designed this way. It’s so dystopian to me. The developed world and other societies all around the world could’ve started out by building more sustainable, more time-efficient, and more economically beneficial transportation infrastructure, but they didn’t. Nobody in positions of power bothered to extensively think out how human societies should be organized, so the 20th-century urban planners designed the world’s cities in such a brainless manner. If cars had been limited to only practical rural transportation, humanity could also have avoided much of the lead pollution that was produced before leaded gasoline was gradually banned around the world. None of this had to be this way, but it is.

The Three E’s of Peak Prosperity’s Crash Course are: Economy, Energy, and Environment. Energy is probably the least worrisome, assuming that humanity starts using nuclear energy, since there is no cleaner, abundant energy alternative with a high Energy Return On Investment (EROI). However, nuclear energy can’t power gasoline-powered cars. Electric vehicles will be able to replace some of society’s transportation needs, but they rely on scarce resources that the West doesn’t have enough access to, and all batteries have finite lifespans. Once gasoline becomes expensive enough, most people simply won’t be able to afford to drive unless they have EVs. Global oil depletion will have many devastating consequences for society.

When civilization is as irrational as it is, the Eulavist (and Efilist) perspectives on life seem more reasonable, or at least it’s easy to feel sympathetic to them. If humanity is not going to be rational on a wide scale, then why should humanity continue to exist at all if it isn’t going to be much different from all that had been happening before we existed? This kind of implies that rationalism is one of the polar opposites of nihilism and other philosophies that reject life.

6. Modern Civilization And The Future

6.1. The Fall Of Georgism, Eugenics, And Global Government

Somehow, the West went from having walkable cities and being relatively pro-Georgism and pro-eugenics in the early 1900s to car-centric cities, mortgage-based economies, anti-eugenics, and a myriad of social delusions in the early 2000s. It’s sad to think that humanity was on the right track, with both Georgism and eugenics having wide popular support, and nowadays both concepts have low public support, due to collectively ingrained morals and ignorance that prevent the concepts from having greater acceptance. The first half of the 1900s also saw the founding of both the League of Nations and the United Nations. Either of these would’ve been the perfect opportunity to create a global government, but they both failed because humanity didn’t have the necessary knowledge or the right worldview to implement one.

Now the closest thing we have to a global government is the de facto might-makes-right geopolitical conflicts between the world’s top superpowers, and the Humanist-infested United Nations that is weak, deluded, and should be burned to the ground. Humanism also became the dominant religion in the Western World during the latter half of the 1900s.

6.2. Modern Civilization Has Been Going Downhill Ever Since The 2000s Started

I’d say that the 80s and 90s were the best time period ever for most of the entire world. The Soviet Union had fallen, most of the world’s economies had rising economic growth, there was great culture being produced, the Internet was created (most of its negative consequences hadn’t happened yet), it still seemed as if there was lots of technological progress to be made, etc.

Everything got so bad after the 1990s: 9-11, The War on Terror, the Great Recession, the rise of China as a superpower, increasing dysgenics and overpopulation, the NSA scandal, the 2015 European migrant crisis, mass cultural insanity, the rise of Humanism, Trump vs Clinton, unprecedented political partisanship, COVID-19 lockdowns, George Floyd Riots, Biden’s presidency, the fall of Afghanistan, Russia invades Ukraine, global recession, decreased standard of living, we’ve almost reached peak technology, the proxy conflicts between Russia and NATO in Africa, worst average mental health ever, the upcoming US debt bubble, the upcoming Chinese Real Estate bubble, etc.

6.3. Why Modern Civilization Is Unlikely To Ever Recover If It Collapses

If modern industrialized civilization collapses, there is no coming back. Humanity climbed up the ladder of easily accessible fossil fuels to create our civilization, and those fossil fuels are now gone. We have kicked away the ladder. To get fossil fuels nowadays, we have to drill down miles under the Earth’s surface, or a mile under the ocean and another mile under the rocks. Coal used to wash up on beaches in England. That doesn’t happen anymore. It’s all gone. The Gulf oil spill of 2010 gives some idea of what we do today to get oil. Enormous amounts of capital were involved to make the Deepwater Horizon drill a mile under the sea and then about three miles down into the rock. Eventually, the world’s supply of fossil fuels will be entirely depleted within the next couple of decades or so. And humanity won’t be able to recreate them because they’re non-renewable resources.

There’s no way to climb back up the ladder of technological complexity without abundant, easily accessible energy. If we fall down that ladder, we’ll have to fall very hard because we have to fall all the way back down. And then the best humanity will be able to do afterward is something like Pre-Modern Europe during the 1600-1700s, a pretty shitty world by modern standards. We’ll never get back what we have now and there will be no coming back, hence why industrial civilization is something worth preserving. If there is even the slightest chance that modern civilization will be irrecoverable if it falls, and we are interested in preserving it, then we should do everything that we can to save it right now.

Historically, technologies tend to die with the civilizations that created them. Without the kind of technology that we currently have, and the kind of complex global economy that we currently have, we can’t produce the energy necessary to run an industrial civilization. Once humanity loses a major level of civilization complexity, it will be impossible to re-achieve it without the energy source that made it possible in the first place (e.g. agriculture, domesticated animals, wind & water mills, fossil fuels, etc). Hence, we can’t maintain or create industrial civilization without industrial civilization. Collapse would be the permanent end of the industrial revolution and the modern world.

In a sense, collapse would be the end of the human experiment, i.e. it would mean the human experiment has failed. Humans will continue to exist, but only by going through endless cycles of famine, war, disease, and famine, war, disease. Or in other words, population overgrowth and collapse, and population overgrowth and collapse. We’ll never have this again. We’ll never have technology again. We’ll never have computers again. We’ll never have spaceships again. That’s all going to be over. So it’s important to understand that.

– Blithering Genius, Collapse is Forever

Video: Energy is the Mother of Invention.

Also see: The Future of Energy should be Mostly Nuclear.

6.4. Predictions For World After Global Oil Depletion

Once gasoline becomes expensive enough, most people simply won’t be able to afford to drive unless they have EVs. Unfortunately, nuclear energy can’t power gasoline-powered vehicles. Once most of society can no longer afford to drive cars, trucks and planes, we can predict that the following will happen:

- All prices in the economy will increase drastically, as they always do when gasoline prices rise.

- All the infrastructure that relies on gasoline-powered vehicles will fall apart.

- The Internet (or parts of it) could collapse if the infrastructure for sustaining it collapses as well.

- If the Internet remains intact, then work from home will become the norm for jobs that used to require people to travel long distances.

- Everybody will have to start living by more local means (people can no longer buy things from so far away). The world will feel much bigger than it did around the late 1900s and early 2000s.

- Bicycles and horses & carriages will become the dominant forms of local transportation in many places.

- Electric vehicles will probably be used in wealthier places that can afford to buy EVs and have sufficient EV infrastructure.

- Gasoline-powered plane flights will no longer occur.

- Some infrastructure will switch to using coal and other fossil fuels, wherever it’s feasible, and while those resources still remain. Burning solid fossil fuels is less efficient.

- Rail lines will become the only low-energy consumption method of transportation for long distance travel over land. High speed rail should be feasible, assuming that countries invest in the necessary infrastructure and embrace nuclear energy.

- People will have to start using ships to travel between continents, similarly to the norm before WWII.

- Airships may also make a comeback if their technological challenges can be overcome. There would certainly be a greater economic demand for airships if gasoline-powered planes are no longer viable.

- Greenhouse gas emissions will probably decrease worldwide once the world’s entire affordable gas supply is used up, thus causing the rise in global temperatures to slow down, although temperatures would still be increasing. However, the increase in coal consumption might counter this.

Perhaps the world will modify the atmosphere to cool the Earth to an extent, but there are no guarantees. This also won’t decrease the ocean’s acidity, and it may not be enough to prevent the loss of the phytoplankton that the entire ocean ecosystem (and a substantial amount of humanity’s food supply) depends on.

If oil is currently being used to get more oil, then perhaps once the EROI gets down to 1:1, it might become more practical to build oil mining infrastructure that can use nuclear energy to mine oil instead. Obviously, this would be an inefficient use of energy compared to what could’ve been done, but it still may be a good strategy for temporarily keeping the world’s gasoline-powered vehicles moving, since it would convert nuclear energy into oil at the cost of exhausting both the world’s supply of nuclear fuel and its oil. If it gets bad enough, then the amount of nuclear energy that we invest into mining the remaining oil out of the Earth would be greater than the energy that’s inside the oil that we’re mining.
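The EROI reasoning above can be made concrete with a little arithmetic. The following is only a rough sketch: the function names and the sample EROI values are hypothetical and chosen for illustration, not measurements of any real oil field. It assumes EROI is simply energy returned divided by energy invested, so the share of extracted energy left over for the rest of society is 1 - 1/EROI.

```python
def eroi(energy_out: float, energy_in: float) -> float:
    """Energy Return On Investment: energy returned per unit of energy invested."""
    if energy_in <= 0:
        raise ValueError("energy_in must be positive")
    return energy_out / energy_in


def net_energy_fraction(eroi_value: float) -> float:
    """Share of the extracted energy left over after paying the extraction cost.

    Positive above 1:1, zero at exactly 1:1, negative below 1:1
    (extraction consumes more energy than the oil contains).
    """
    if eroi_value <= 0:
        raise ValueError("eroi_value must be positive")
    return 1.0 - 1.0 / eroi_value


if __name__ == "__main__":
    # Hypothetical EROI values, from an easy oil field down to a net energy sink.
    for value in (20.0, 5.0, 2.0, 1.0, 0.5):
        print(f"EROI {value}:1 -> net fraction {net_energy_fraction(value):.2f}")
```

Under these assumptions, an EROI below 1:1 gives a negative net fraction. That is the situation described above, where nuclear energy would be spent to produce oil that holds less energy than was invested, which only makes sense if liquid fuel is valued for its portability rather than as an energy source.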

6.5. What It Would Be Like To Restart Modern Human Civilization If It Falls

Instead of a sudden collapse, humanity will most likely experience a long decline, with some shocks that take us down to lower levels of complex civilization quickly, but not all the way down. It will probably be like a series of steps, and it will differ by region. There are a lot of unknowns. We cannot predict the future in the sense of predicting a sequence of events, but we do know that the current system is unstable, and so we can predict that it will collapse, unless it is reformed in certain ways.

Annual heat waves will become more widespread, record breaking temperatures will happen year after year, and many regions will become uninhabitable. This will cause the world population to decline, unless the world can prevent this by somehow altering the atmosphere’s composition to block out more sunlight and bring the Earth back to previous temperatures.

If civilization falls and humanity attempts a Second Industrial Revolution, it will have many differences compared to the path that humanity took that led to the Industrial Revolution in the 1800s and 1900s:

- Fossil fuels will be all gone, especially all the ones that were the easiest to access. If any do remain, they will be difficult to access.

- Many cities and societies will have been destroyed by wars, due to overpopulation.

- Humanity will have a deteriorated human genome.

- Fully white people will be very rare across the world since the Great Replacement would have depleted the Earth’s European population.

- There may be more human genetic diversity in every region around the world due to all the race-mixing that occurred during the 2000s. This could have some positive benefits, but it would probably be a temporary barrier to restarting modern civilization since it would cause populations all around the world to be less adapted to their pre-industrial environments.

- More violent genes would be selected for during and after the Great Collapse since those genes would be more adaptive in an environment where the tremendous cooperation that once held Civilization together has completely ceased on the scale that it once did. This would make it more difficult to restart Civilization.

- Many viral and bacterial diseases that had previously been eradicated or largely brought under control in the human population, like polio, tuberculosis, measles, smallpox, etc., may return if modern vaccinations are no longer possible.

- If diseases continue to exist worldwide to some extent or another and humanity is unable to sufficiently adapt to them without vaccination, then human populations worldwide will be limited by disease instead of war. This would be somewhat preferable since war entails violence, the destruction of property, increased rape, and other consequences that are more unfavorable than the ones entailed by sufficiently widespread disease.

- Humans have already undergone the encephalization process historically, so even if all the biggest game animals are all gone, this wouldn’t be a problem as long as a stable food source that provides all the necessary nutrients is available to some degree.

- Humans may be more likely to act differently, if they could somehow quickly access and obtain some of the knowledge humanity used to have before the Great Collapse (the reverse Industrial Revolution) occurred.

- This knowledge could enable the humans that rediscovered that historical knowledge to act in ways that might avoid some of history’s greatest mistakes.

- Humans would have to live in a world of considerably less diversity in flora and fauna, due to the Sixth Recorded Mass Extinction Event.

- The Great Exchange between the Old World and the New World has already happened, so Old World and New World flora, fauna, and crops would still be present on all the continents as long as the people living in their respective places continue to cultivate them.

- All the nuclear power plants would melt down since they could no longer be maintained, if any were still kept running during the Great Collapse.

Footnotes:

Of course, the notion of “evil” depends on perspective, and humans often do evil things naturally out of necessity for survival. But the point still stands that nurture has large effects on how people learn to behave. Most people don’t usually change their behavior unless they start to question assumptions about how they should behave, or unless their circumstances make it necessary to adapt to new circumstances.